The state of the AI Agents ecosystem: The tech, use cases, and economics

In May, we discussed an impending shift in enterprise architectures toward AI Agents. In the six months since that article came out, we have monitored actual agentic deployments across companies, noticing the differences in use case complexity, the tradeoff of buying function-specific Agents versus building custom agentic workflows, and the variety in how value is measured and attributed.

We spoke to builders, enterprises, end users, and researchers to understand where Agents stand today and which use cases are in production in enterprises, and to share key learnings that can help builders and enterprises in their agentic journeys.

1. State of the AI Agent ecosystem

What is your definition of an Agent?

This question is often a fun conversation starter. The academic definition, software that can reason on a task and take action independently, captures the high-level aspiration of AI Agents. We think of Agents as a new architecture combining core application logic and associated workflow automation in a unified flow, embedding LLMs to interweave planning and execution of complex tasks.

Agents could be as simple as a single-task Agent, which combines an LLM with a specific tool or function. Multi-agent platforms address complex workflows by breaking down the task into distinct Agents and modules, which are orchestrated to deliver the required output.

Design considerations for AI Agents

Building Agents starts with a deep understanding of the task and user experience. While LLMs are a key part of the architecture, most builders use the foundation models where applicable and combine them with standard application elements, data and tool integrations, and APIs.

We share two key learnings and design tradeoffs from our conversations below:

1. User-in-the-loop: Developers working closely with users across the full agentic lifecycle

For Agents to move beyond experiments, developers are taking a consultative approach to iterating with users on design, deployment, and scaling in production environments. Challenges remain in Human-Agent communication; some best practices we have gleaned include:

- Building a clear understanding of user workflow trajectories to build diverse training examples and reward functions to optimize Agent performance and improve task planning

- Mapping out the required integrations and end-user data architecture to ground the Agent

- Developing good User evals, error handling, and feedback to train and fine-tune outputs

- Optimizing the user experience for output formats and tone in customer-facing use cases

- Building trust by generating audit trails and automation artifacts to align with policies

- Getting psychological and technical buy-in from users and the organization

2. Task planning (aka Reasoning): Human-defined task planning vs. LLM-derived planning

Translating user input into a task plan is a key design choice: some builders define prescriptive control flows for agentic execution, while others let LLMs come up with the plan. “Chain of Thought” reasoning models that combine reinforcement learning with forcing the model to consider multiple paths before picking the optimal one at inference time could be a game changer for agentic design. For simple bounded workflows, breaking down a task into a sequence that the LLM can memorize, along with active feedback loops to fine-tune the model, might work.

More complex workflows or applications could take a multi-agentic approach requiring either coded execution graphs such as LangGraph or multi-agent orchestration approaches like CrewAI, which can:

- Help developers and end users build Agents (with access to tools, APIs, and data sources)

- Orchestrate task flows and Agent interactions to drive clean handoffs and state maintenance

- Provide feedback loops and customizable UX to help optimize Agent performance
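To make the idea of a coded execution graph concrete, here is a minimal sketch in plain Python. It does not use LangGraph's or CrewAI's actual APIs; the node names (`plan`, `fetch`, `draft`), the shared `state` dictionary, and the stubbed logic are all assumptions made for illustration. Each node updates shared state and hands off to the next node by name, which is one simple way to keep handoffs and state explicit.

```python
from typing import Callable, Dict, Optional

State = Dict[str, object]
# A node reads and updates the shared state, then names the next node (None stops the run).
Node = Callable[[State], Optional[str]]

def plan(state: State) -> Optional[str]:
    # Human-defined plan: a fixed sequence rather than an LLM-derived one.
    state["steps"] = ["fetch", "draft"]
    return "fetch"

def fetch(state: State) -> Optional[str]:
    # Stand-in for a tool or data-source call.
    state["records"] = [r for r in state["source"] if state["query"] in r]
    return "draft"

def draft(state: State) -> Optional[str]:
    # Stand-in for an LLM call that writes the final output.
    state["output"] = f"Found {len(state['records'])} record(s) for '{state['query']}'"
    return None

GRAPH: Dict[str, Node] = {"plan": plan, "fetch": fetch, "draft": draft}

def run(graph: Dict[str, Node], start: str, state: State, max_steps: int = 10) -> State:
    trail = []  # audit trail of visited nodes
    current: Optional[str] = start
    while current is not None and len(trail) < max_steps:
        trail.append(current)
        current = graph[current](state)
    state["trail"] = trail
    return state

result = run(GRAPH, "plan", {"query": "invoice",
                             "source": ["invoice 17", "memo 3", "invoice 22"]})
print(result["output"], result["trail"])
```

The `max_steps` cap and the recorded `trail` are design choices for this sketch: the first bounds runaway loops, the second gives a simple audit artifact of what the Agent did.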

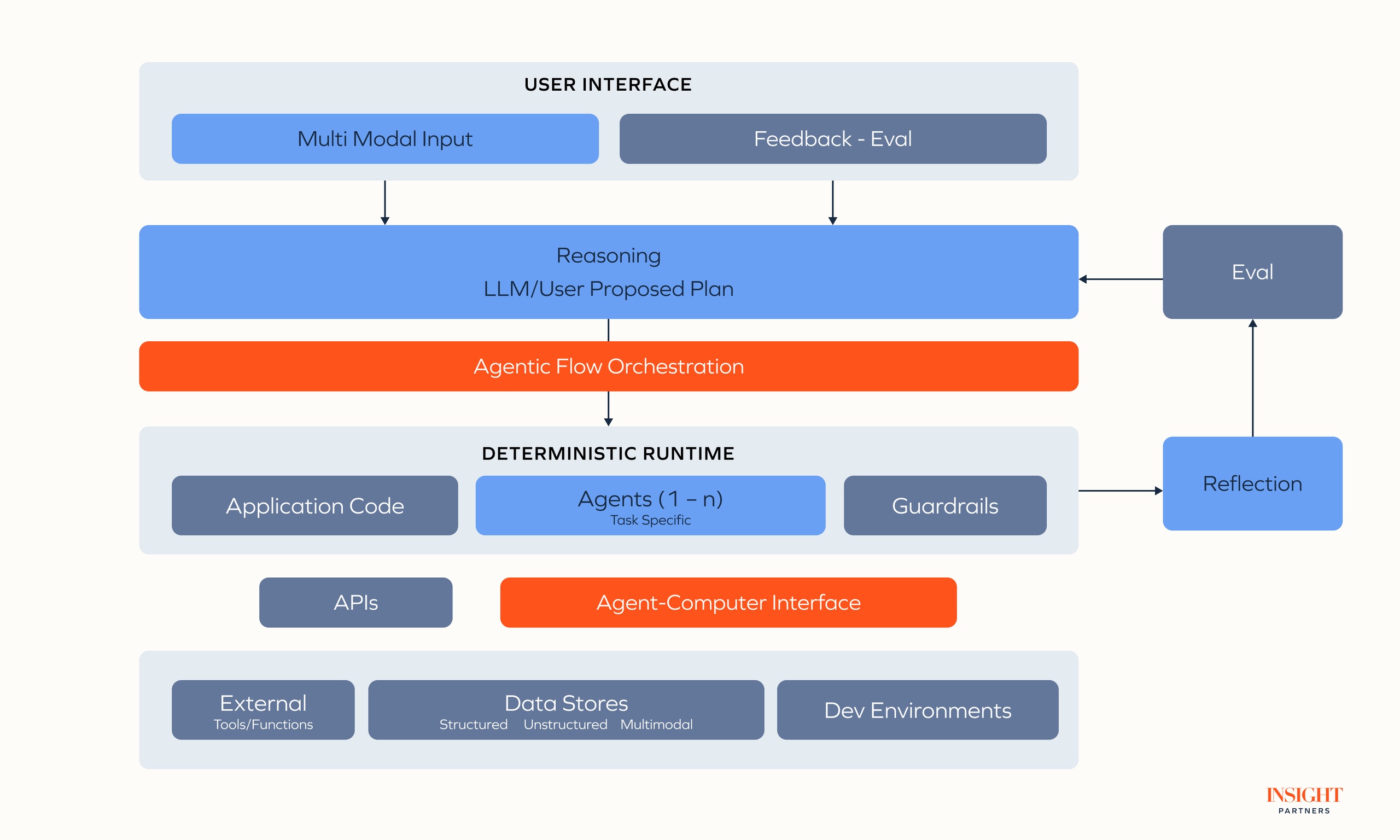

Reference architecture of an enterprise Agent

Deterministic Agent performance requires good system engineering with robust integrations and clean interfaces.

Some key building blocks of agentic architectures are as follows:

Data retrieval

There is a diverse combination of options for builders to add context to Agents based on the use case.

- Retrieval Augmented Generation (RAG): Grounds LLM responses by retrieving and integrating knowledge from private and public datasets. LLMs are sensitive to input variations and require consistent data input. LlamaIndex, for example, helps build RAG pipelines for simple Agents.

- Memory: With LLMs adding memory along with solutions like Mem0, Agents can refine knowledge in semantic memory with external data, recall actions from episodic memory and accumulate experiences in long-term memory to provide adaptability and personalization.

- Long context: Growing window size in LLMs enables better contextual awareness and multi-step reasoning by tracking each step without truncation. It will also help with long-horizon tasks and multi-modal inputs. RAG versus long context windows continues to be an active debate.
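As a rough illustration of the retrieval step in RAG, the sketch below ranks a handful of documents against a query and places the best match into the prompt. It is a toy under stated assumptions: word-count cosine similarity stands in for a real embedding model and vector store, and the documents, function names, and prompt wording are all hypothetical.

```python
import math
import re
from collections import Counter
from typing import List

def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two word-count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_prompt(query: str, docs: List[str], k: int = 1) -> str:
    # The retrieved text is placed in the prompt to ground the model's answer.
    context = "\n".join(retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

DOCS = [
    "Refunds are processed within 5 business days.",
    "Support is available on weekdays from 9 to 5.",
    "Invoices are issued on the first day of each month.",
]
top = retrieve("When are refunds processed?", DOCS)
prompt = build_prompt("When are refunds processed?", DOCS)
print(prompt)
```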

Agent computer interfaces

Agent computer interfaces are a force multiplier for Agent design: they connect everything from diverse data sources to external resources and tools, simplifying design while improving the Agent’s abilities.

- Function/tool calling: LLMs can natively make structured requests such as API calls, database queries, and file manipulation, and can invoke tools such as web search or code execution. As API services are expressed as Agents (Stripe Payments), these will be incorporated into agentic workflows.

- Computer use: This is an exciting function for LLMs to execute commands in a computing environment using human interfaces. With a limited action space (Bash commands, file operations), this is very suitable for quick implementations. For complex and ambiguous tasks needing precise execution, optimal token use might drive an API-based approach for scale and consistency.

- Integrations: Integrations define an Agent’s capability. Connecting to relevant data sources and applications is often the first step in the deployment of Agents in production. While this requires code and API integrations such as those offered by Workato, a new class of middleware such as LangChain and Paragon is also emerging, along with LLMs themselves offering this capability.
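The function/tool calling pattern described above can be sketched as a small dispatcher: the model emits a structured request, and application code looks up and executes the matching tool. This is a minimal sketch, not any vendor's tool-calling API; the JSON request shape, the `tool` decorator, and the `get_stock_level` and `add` tools are hypothetical.

```python
import json
from typing import Callable, Dict

TOOLS: Dict[str, Callable] = {}

def tool(fn: Callable) -> Callable:
    # Register a function so the Agent can call it by name.
    TOOLS[fn.__name__] = fn
    return fn

@tool
def get_stock_level(sku: str) -> int:
    # Hypothetical inventory lookup; a real Agent would query a database or API.
    return {"A-100": 12, "B-200": 0}.get(sku, 0)

@tool
def add(a: float, b: float) -> float:
    return a + b

def dispatch(request_json: str) -> str:
    """Execute one structured tool request and return a JSON result."""
    try:
        request = json.loads(request_json)
        fn = TOOLS[request["name"]]
        result = fn(**request.get("arguments", {}))
        return json.dumps({"ok": True, "result": result})
    except (KeyError, TypeError, json.JSONDecodeError) as exc:
        # Errors are returned as data so the model can read them and retry.
        return json.dumps({"ok": False, "error": f"{type(exc).__name__}: {exc}"})

# Stand-in for the structured request a model would emit.
model_output = '{"name": "get_stock_level", "arguments": {"sku": "A-100"}}'
reply = dispatch(model_output)
print(reply)
```

Returning failures as structured data rather than raising is a deliberate choice in this sketch, since it lets the calling loop feed the error back to the model.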

Performance

- Evaluations: A key learning from the self-driving Waymo Driver platform has been the importance of rigorous evaluation and feedback loops. Agentic architectures must develop a robust evaluation loop that incorporates step-by-step tests and validation criteria combined with active user feedback. A best practice is using an end-to-end Reflection loop, where the LLM that is constantly trained on feedback from the evaluations is used to test Agent output for accuracy. Defining evaluations for real-world use cases is tough; breaking the criteria into intermediate steps, logging error messages, and getting user feedback for training can help with the definition. Braintrust and HoneyHive offer good solutions to complement DIY approaches.

- Guardrails: Guardrails, such as the one from AWS, define bounds within which Agents can function and help ensure that company policy and access permissions are enforced as part of the execution flow.
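One way to picture the step-by-step evaluation described above is to check each intermediate output of an Agent run against its own validation criterion and log a message for every failure. The sketch below is an assumption-laden illustration, not Braintrust's or HoneyHive's API: the step names (`retrieve`, `tool_call`, `answer`), the checks, and the sample trajectory are hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class StepResult:
    step: str
    passed: bool
    message: str

def is_json(text: str) -> bool:
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False

# One validation criterion per intermediate step (all hypothetical).
CHECKS: Dict[str, Callable[[str], bool]] = {
    "retrieve": lambda out: bool(out.strip()),   # something was retrieved
    "tool_call": is_json,                        # request is well-formed JSON
    "answer": lambda out: "[source:" in out,     # answer cites a source
}

def evaluate(trajectory: Dict[str, str]) -> List[StepResult]:
    results = []
    for step, check in CHECKS.items():
        output = trajectory.get(step)
        if output is None:
            results.append(StepResult(step, False, "step missing"))
        elif check(output):
            results.append(StepResult(step, True, "ok"))
        else:
            results.append(StepResult(step, False, f"failed check on: {output[:40]!r}"))
    return results

def pass_rate(results: List[StepResult]) -> float:
    return sum(r.passed for r in results) / len(results)

trajectory = {
    "retrieve": "refund policy, section 2",
    "tool_call": "lookup_order(1182)",  # deliberately not valid JSON
    "answer": "Refunds take 5 days [source: refund policy]",
}
report = evaluate(trajectory)
failed = [r.step for r in report if not r.passed]
print(failed, round(pass_rate(report), 2))
```

The logged failure messages are the kind of signal that could later be combined with user feedback for training, as the Evaluations bullet suggests.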

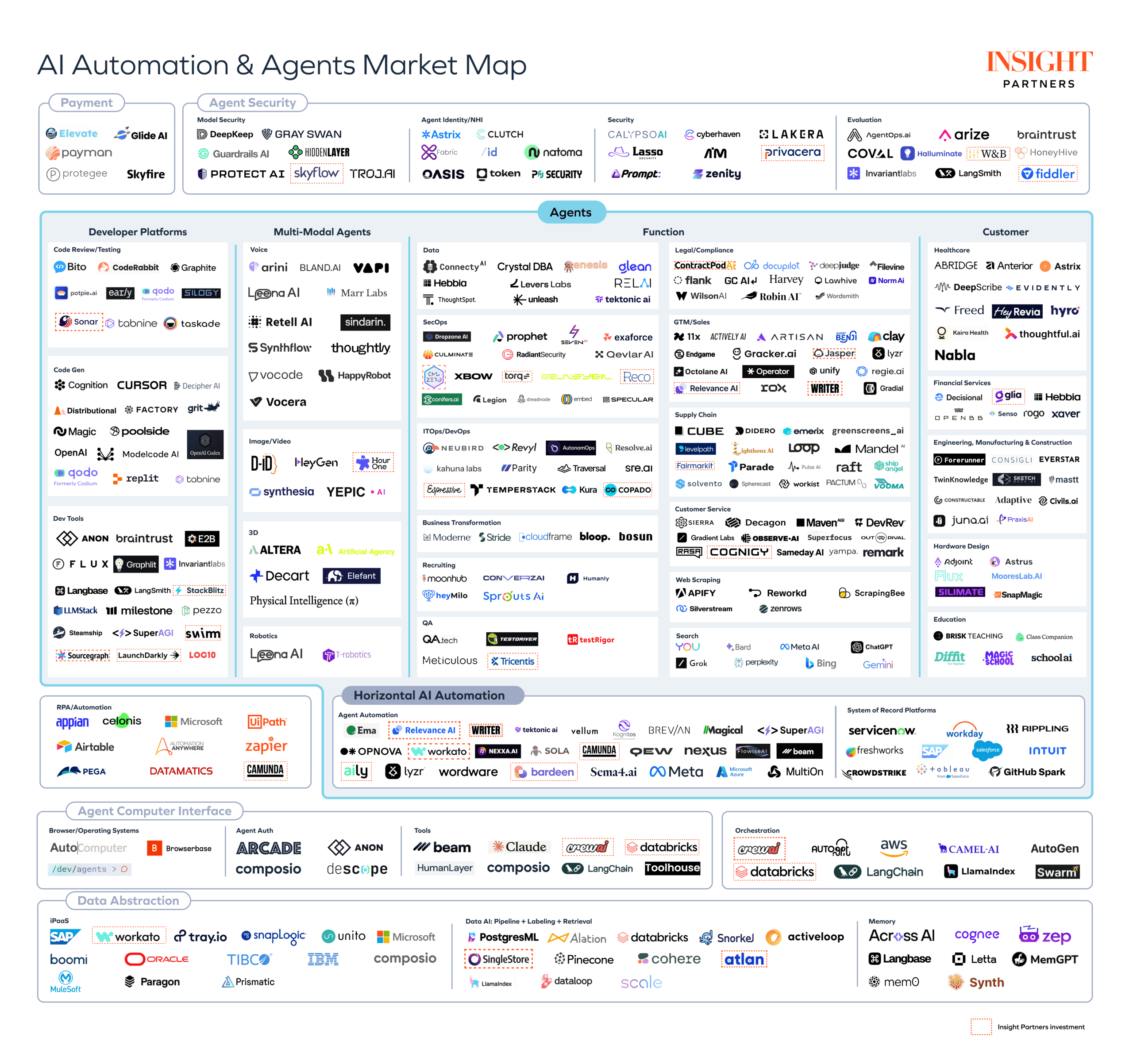

Agent types

A rich ecosystem of AI Agents is emerging; a few prominent archetypes include:

- Vertical Agents: Focused on bounded and well-defined tasks that represent a normalized workflow across a company. They combine AI capabilities with deep functional knowledge to address high-volume use cases like Relevance for GTM, Harvey and Flank for Legal, and Sierra for customer service. From a pricing perspective, these Agents fit well into a “Hire-an-Agent” model or an outcome-based pricing model where there can be direct attribution of value.

- Horizontal agentic platforms: These platforms integrate common data sources, tools, UX, and guardrails to build Agents for different workflows and user communities across a company. Examples range from Cohere’s enterprise platform to traditional RPA companies retrofitting agentic solutions. Given the complexity, some companies are using a consultative approach with users-in-the-loop to optimize success rates. Some sophisticated enterprises are taking a build approach leveraging the rich set of Agent tools. In the SMB/knowledge worker space, startups like Bardeen are targeting simpler use cases using no-code platforms and a rapidly increasing library of reusable Agents across users.

- Multimodal Agents: While multimodality could be a tool in one of the above Agents, there is an evolving class of Agents focused on multimodal workflows. Use cases here range from video manipulation and video generation to multilingual chatbots, with solutions such as the Director framework for video or ElevenLabs and Cognigy’s voice Agents. As these powerful new multimodal models launch, user interfaces will tend toward natural human interfaces of voice and images.

- Agentic interfaces for classical SaaS: Incumbent software platforms are adding Agent overlays to capture a large percentage of the workflows that natively happen in the underlying system of record (Agentforce). These Agents are an efficient value unlock for users invested in these platforms.

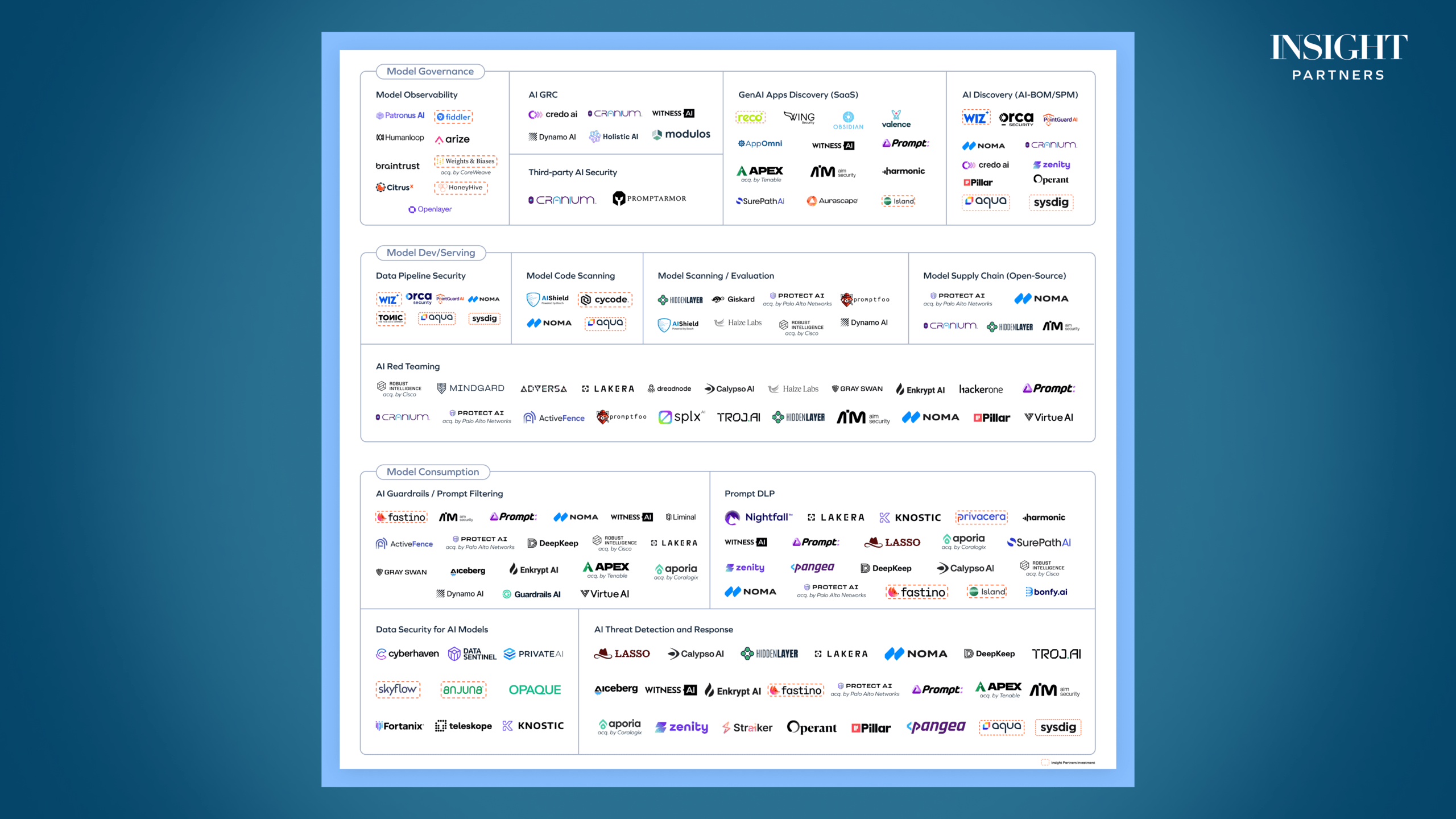

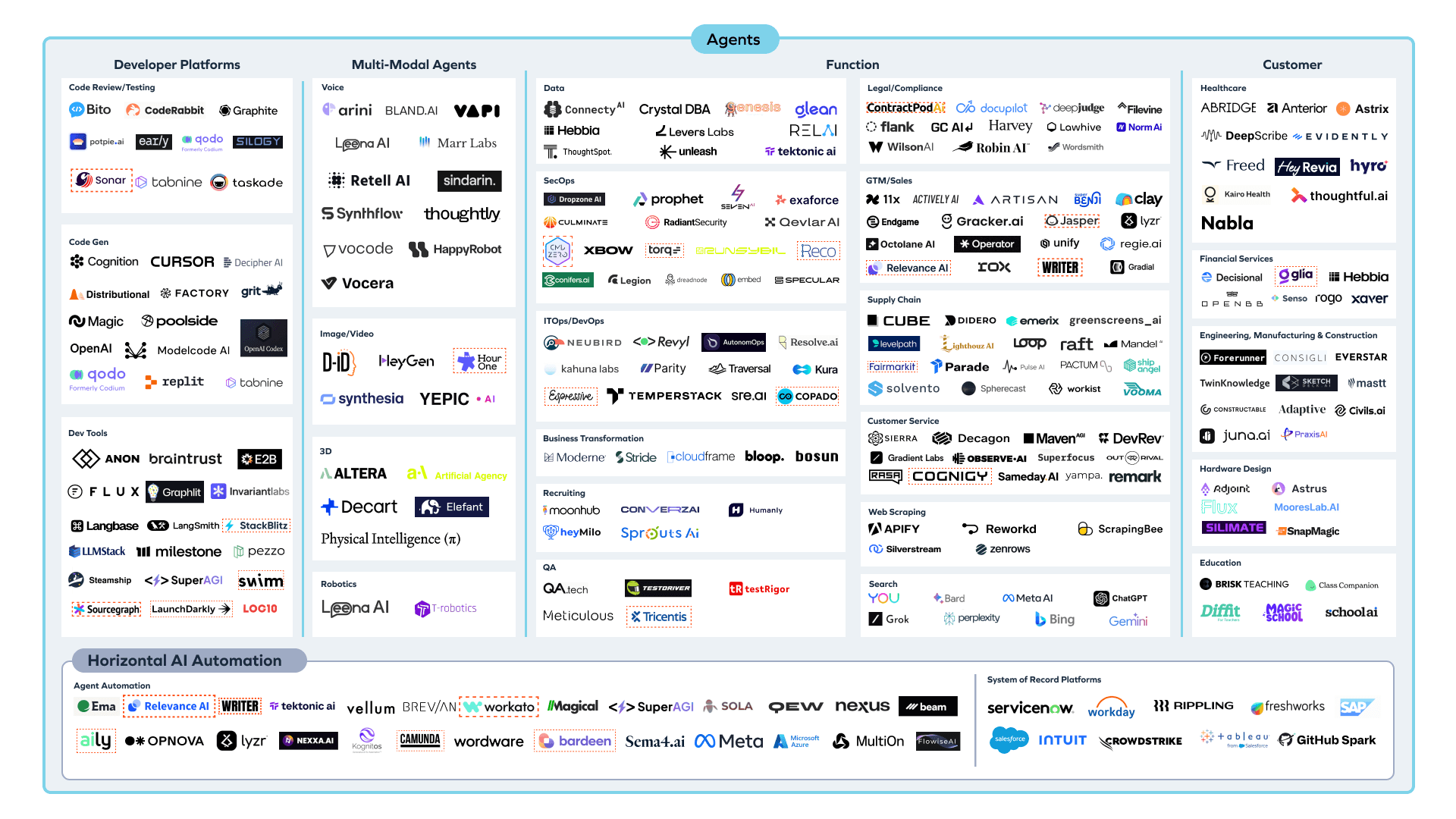

2. AI Agents market map

There are two key areas represented in the map where we see opportunity going forward.

Infrastructure

Scaffolding and infrastructure must be built for Agents to be deployed reliably in production. While the categories displayed above will shift and evolve over time, we are excited by builders who are enabling and improving the infrastructure for agentic deployment.

Verticalized platforms

We see an opportunity for verticalized functional Agents that can be stitched together with complex workflows targeting a specific industry or function. We are particularly interested in verticals where there are highly technical and complex tasks, unique data sources, and where the incumbent is struggling to keep pace with innovation. We believe that if companies target these vertical markets with pre-built agentic workflows, enterprises will likely buy from external vendors rather than rebuilding the workflows themselves.

There are at least two remaining questions unanswered by the state of the market today:

- Where do incumbents have the advantage and where should application layer startups be focusing their efforts?

- What parts of the infrastructure stack will be subsumed by the model providers? Where else will there be infrastructure consolidation?

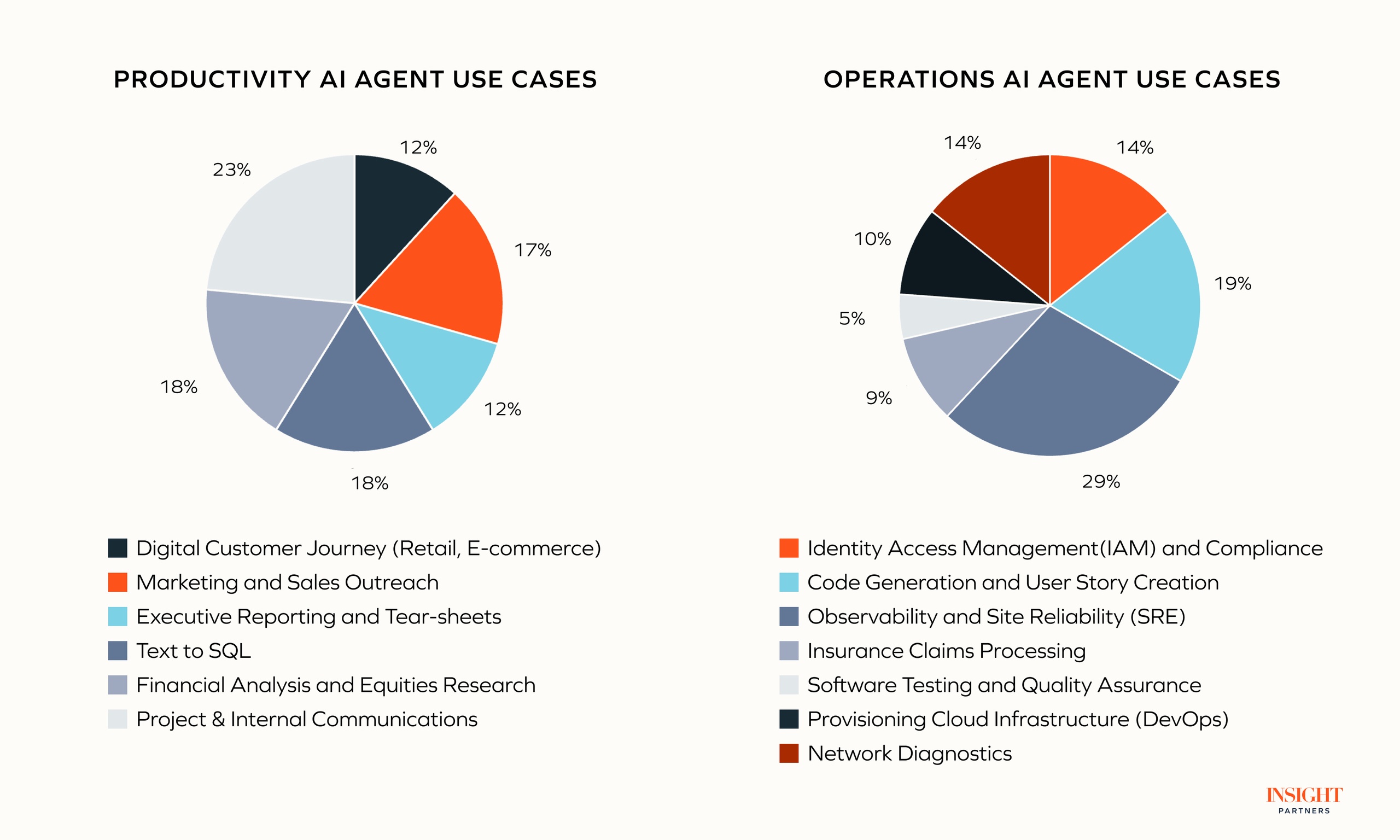

3.1 Agents in the wild: Real-world case studies of Agents in the enterprise

Navigating AI Agent use cases in production

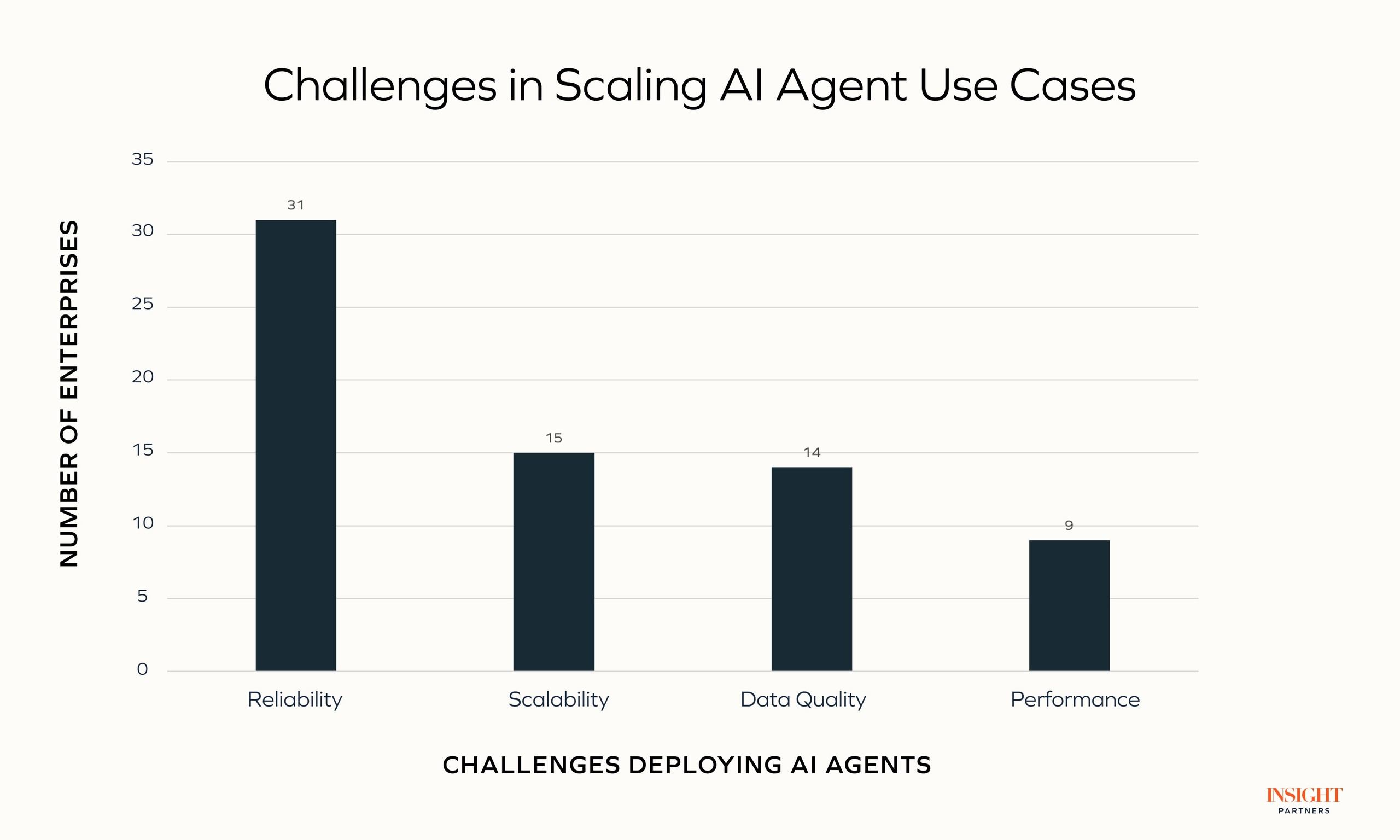

Over the past year, many agentic applications have moved from concept to reality, with varying maturity across industries. Insights from surveying 105 Fortune 500 companies reveal stages of deployment ranging from rules-based Agents handling tasks like summarization and approvals to advanced Multi-Agent systems tackling tax planning, supply chain optimization, and fund management. These stages are reflected in the examples in sections 3 and 4 of this article.

In this article, our goal is not to capture high-level categories of AI Agents but to dissect real-world detailed use cases deployed in some of the world’s largest enterprises. We break down these use cases into three categories: buy externally, experiment, and production.

Developer-facing (SDLC)

AI Agents are augmenting workflows across the Software Development Lifecycle (SDLC), including code generation, end-to-end testing, provisioning infrastructure, approvals, compliance, and even observability.

- Identity Access Management (Production): An asset manager uses AI Agents to automate complex IAM and IaC workflows, including infrastructure provisioning and access approvals. Task-planning Agents coordinate across cloud environments (AWS, Microsoft, on-premises), enabling developers to focus on high-priority coding and saving hours on repetitive tasks.

- Automated testing and provisioning (Production): A global holdings corporation leverages AI Agents to automate user story creation, test case generation, and infrastructure tasks like setting up VMs and managing deployments via Terraform. This reduces DevOps, TPM, and SRE overhead, freeing teams to focus on complex development challenges.

- Code generation and compliance (Production): A large bank employs AI Agents for code commenting, unit testing, regulatory compliance, and validating data models, minimizing errors while prioritizing quality and reducing manual workload across its software lifecycle.

- AI for software development at AT&T (Production): AT&T uses autonomous Agents to streamline software development by automating user story creation, coding, and test execution. A “master Agent” oversees task-specific Agents, allowing developers to tackle complex issues while the AI handles routine operations.

Customer experience

AI Agents in customer experience can transform brand interactions by delivering personalized, seamless journeys and improving service quality across channels.

- Retail advisor training (Experiment): A beauty retailer uses an AI Agent as a virtual beauty advisor to onboard and train store associates. Drawing on chatbot interactions from the COVID-19 era, the Agent builds a knowledge platform to enhance product knowledge and customer service as part of a three-year program.

- Product discovery and assistance (Experiment): A retail giant deploys AI Agents across the customer journey, from product discovery to post-purchase support. Category-specific Agents for hair care and skincare provide tailored assistance via a natural language web interface, integrating in-store and online customer context.

- Gift finder experience (Experiment): For the holiday season, a retailer pilots an AI Agent offering personalized gift recommendations. The Agent analyzes prior customer interactions, product catalogs, and customer preferences, including skin characteristics, to suggest ideal products.

- Customer service AI Agent (Buy): A customer service solutions provider uses AI Agents to assist human agents by automating repetitive tasks, suggesting responses, and streamlining workflows. This builds on call-center AI use cases, leveraging agentic workflows for real-time approvals, document completion, and data gathering.

- Insurance loan AI sales Agent (Buy): A leading EU bank employs outbound voice AI Agents to increase conversions for loan insurance products. The Agent proactively calls customers who abandoned transactions, using a natural voice and personalized insights to re-engage and convert them.

Backoffice (Finance, Procurement)

AI Agents streamline back-office operations by automating tasks like inventory monitoring, invoicing, and research. Thus, they reduce errors and workloads and improve decision-making with data-driven insights.

- Supply chain inventory and triage (Production): A consumer goods company uses AI Agents to monitor stock levels, trigger replenishment orders, and address availability issues, preventing stockouts and ensuring product availability.

- Automated invoicing (Production): The same consumer goods company employs AI-driven invoicing Agents that streamline invoice processing, enhancing accuracy and reducing manual tasks in finance.

- Research and document analysis (Production): An investment bank uses AI Agents for research tasks such as reading unstructured documents, summarizing content, and mapping company names to tickers for investment analysis.

- Stock behavior forecasting (Experiment): A financial exchange leverages AI to predict stock behavior based on historical data and real-time trends, providing insights for trading decisions.

- Financial auditing (Buy): KPMG has deployed teams of AI Agents that autonomously plan, coordinate, and execute actions under human supervision for audit, tax, and advisory workflows.

Data analysis

AI Agents can enhance data-intensive workflows by managing, analyzing, and ensuring data quality, enabling reliable insights with minimal human effort.

- Cloud usage analysis (Experiment): An industrial solutions firm’s cloud data AI Agent detects anomalies and suggests cost-saving optimizations. Their AI Agent can interface between platforms like AWS CloudWatch, Azure, and Google Cloud’s FinOps platforms, ingest structured data, and identify abnormalities around cloud usage.

- Text-to-SQL and data analysis (Production): A global telecom company utilizes a Multi-Agent system to interpret natural language queries and convert them to SQL. The system determines the relevant database, identifies the appropriate tables and columns, generates the SQL query, and employs a reflection Agent to verify and refine the output.

- Compliance-driven data querying (Production): A financial services firm’s AI Agents perform data queries and compliance checks, ensuring internal data quality control and compliance adherence without exposing client data directly.
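The text-to-SQL flow with a reflection Agent, as described in the list above, can be sketched roughly as a generate, verify, and retry loop. This is an illustration under stated assumptions, not the telecom company's system: the LLM call is replaced by a stub (`generate_sql`) whose first attempt is deliberately wrong, the `subscribers` table is made up, and the reflection step simply asks SQLite whether the query compiles against the schema.

```python
import sqlite3
from typing import Optional, Tuple

SCHEMA = "CREATE TABLE subscribers (id INTEGER PRIMARY KEY, tier TEXT, region TEXT)"

def generate_sql(question: str, attempt: int) -> str:
    # Stand-in for an LLM call. The first attempt uses a column that does
    # not exist, so the reflection step has something to catch.
    if attempt == 0:
        return "SELECT COUNT(*) FROM subscribers WHERE level = 'premium'"
    return "SELECT COUNT(*) FROM subscribers WHERE tier = 'premium'"

def reflect(conn: sqlite3.Connection, sql: str) -> Optional[str]:
    """Reflection step: return None if the query is acceptable, else an error message."""
    if not sql.lstrip().lower().startswith("select"):
        return "only SELECT statements are allowed"
    try:
        # Compiles the query against the schema without returning table rows.
        conn.execute(f"EXPLAIN QUERY PLAN {sql}")
    except sqlite3.Error as exc:
        return str(exc)
    return None

def answer(conn: sqlite3.Connection, question: str, max_attempts: int = 3) -> Tuple[str, int]:
    for attempt in range(max_attempts):
        sql = generate_sql(question, attempt)
        error = reflect(conn, sql)
        if error is None:
            return sql, conn.execute(sql).fetchone()[0]
        # In a real system, the error text would be fed into the next prompt.
    raise RuntimeError("no valid query produced")

conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
conn.executemany("INSERT INTO subscribers (tier, region) VALUES (?, ?)",
                 [("premium", "EU"), ("basic", "EU"), ("premium", "US")])
sql, count = answer(conn, "How many premium subscribers do we have?")
print(sql, count)
```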

Operations

From document summarization to network diagnostics and workflow management in clinical settings, these Agents speed up critical processes and ensure quality control. Their ability to generate comprehensive reports and insights can help executives and employees make quicker, more informed decisions.

- Automated network diagnostics (Production): A global telecom employs an AI Agent to identify and resolve network issues, reducing manual diagnostics time. The Agent analyzes complex logs and structured data tables to detect abnormalities and alert the appropriate security or development team.

- Executive reporting and knowledge retrieval (Production): A second investment bank leverages AI Agents to produce monthly executive briefing reports. Functional Agents collect business metrics company-wide, while a drafting Agent compiles and finalizes the CEO’s tear sheet for enhanced decision-making.

Some AI Agents are making a tangible impact in Fortune 500 enterprises, automating tasks across customer service, finance, and operations to deliver speed, accuracy, and cost efficiency. From streamlining supply chains and diagnostics to personalizing customer experiences and slashing time-to-market in development, these Agents can drive productivity and ROI while freeing teams for strategic work. Now, on to the challenges of scaling these game-changing systems.

3.2 Short-term challenges and best practices in deploying agentic AI

Compliance challenges in data storage and processing

Compliance poses significant hurdles in deploying AI Agents, especially in data-sensitive industries. Companies must navigate data sovereignty laws, data governance, and healthcare regulations to ensure their AI applications are legally compliant while maintaining effectiveness:

- Data sovereignty laws: An EU telecom uses region-specific models with open-source customer data to meet local regulations, sacrificing some accuracy and access to advanced partner LLMs.

- Data governance and PII handling: A global customer service company implements strict protocols to anonymize PII in RAG systems; some organizations model frameworks on the EU AI Act to categorize risks and streamline approvals.

- Healthcare compliance: A beauty brand avoids prescriptive language in AI skincare recommendations to prevent medical implications.
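As a simple picture of anonymizing PII before text enters a RAG index, the sketch below replaces email addresses and phone-like numbers with placeholder labels. It is only a sketch: the two regular expressions are simplified assumptions that will miss many real-world formats, and production systems typically rely on dedicated detection tooling rather than hand-written patterns.

```python
import re

# Simplified, hypothetical patterns; real PII detection needs far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

def anonymize(text: str) -> str:
    # Replace each match with a label so downstream retrieval never sees the raw value.
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

ticket = "Customer jane.doe@example.com called from +44 20 7946 0958 about order 1182."
clean = anonymize(ticket)
print(clean)
```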

Importance of data preparation and documentation

High-quality, well-documented data may prove essential for AI success, yet many organizations struggle with consistency. Companies are investing in data standardization and curation to enhance AI accuracy and adaptability across various industries.

- Data standardization efforts: A top financial institution improved AI accuracy from an 80% baseline by standardizing undocumented rules and investing in the modern data stack, including metadata-driven data catalogs and lineage tools.

- Adaptable AI models: A Fortune 500 consumer goods company is developing modular AI Agents for domain-specific supply chain tasks; a Big 4 audit firm focuses on balancing client-specific needs with flexible models.

- Dedicated data curation roles: Organizations are establishing dedicated data curation positions to drive high-quality inputs for AI systems.

Driving reliable AI outputs through auditing and error management

Reliability of AI outputs is critical, especially in client-facing roles where errors can have significant consequences. Companies are implementing strategies like traceability, human oversight, and real-time monitoring to mitigate risks such as hallucinations.

- Traceability with citations: A manufacturing company requires AI recommendations to include citations to enhance output reliability and traceability.

- Human-in-the-loop oversight: Financial institutions introduce validation layers and human review for high-risk AI outputs to ensure accuracy.

- Real-time monitoring tools: The use of real-time observability tools and reflection Agents can help detect and address issues promptly.
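The practices in the list above can be combined into a simple routing rule: outputs without citations are rejected, high-risk outputs go to a human reviewer, and the rest pass through. The sketch below assumes a hypothetical `AgentOutput` record with a two-level risk label; how risk is actually scored would depend on the organization.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class AgentOutput:
    text: str
    citations: List[str] = field(default_factory=list)
    risk: str = "low"  # hypothetical label: "low" or "high"

def route(output: AgentOutput) -> str:
    if not output.citations:
        return "reject"          # traceability: no citation, no release
    if output.risk == "high":
        return "human_review"    # human-in-the-loop for high-risk outputs
    return "auto_approve"

decisions = [
    route(AgentOutput("Replace pump seal", citations=["manual p.12"])),
    route(AgentOutput("Approve the loan", citations=["policy 4.2"], risk="high")),
    route(AgentOutput("Trust me")),
]
print(decisions)
```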

Educating enterprises and measuring ROI

Calculating the ROI for AI Agents is complex but essential for justifying their deployment. Companies are developing frameworks to compare AI performance with human benchmarks and link AI tasks to tangible financial outcomes.

- Performance comparison studies: An asset management firm found AI matched 80% human accuracy but achieved higher volumes, leading to the recalibration of productivity metrics.

- Financial outcome frameworks: An EU bank developed a system connecting AI tasks to productivity gains, cost savings, and revenue generation.

- Recalibrating productivity metrics: Organizations adjust traditional measurements to account for AI capabilities and efficiencies.
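A framework that links AI tasks to financial outcomes can be reduced, at its simplest, to a standard ROI calculation: total benefit minus cost, divided by cost. The sketch below is an assumption for illustration, not the EU bank's framework; the benefit categories mirror the ones named above, and every figure is hypothetical.

```python
def agent_roi(hours_saved: float, hourly_cost: float, cost_savings: float,
              revenue_gain: float, agent_cost: float) -> float:
    """Return ROI as a fraction: (benefit - cost) / cost."""
    if agent_cost <= 0:
        raise ValueError("agent_cost must be positive")
    # Productivity gains are valued as hours saved times a loaded hourly cost.
    benefit = hours_saved * hourly_cost + cost_savings + revenue_gain
    return (benefit - agent_cost) / agent_cost

# Hypothetical quarter: 200 hours saved at 50 per hour, plus direct savings and revenue.
roi = agent_roi(hours_saved=200, hourly_cost=50.0, cost_savings=2000.0,
                revenue_gain=3000.0, agent_cost=10000.0)
print(f"ROI: {roi:.0%}")
```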

Overcoming cultural resistance to AI adoption

Cultural resistance remains a barrier to AI adoption, especially among employees fearing job loss. Companies address these concerns through transparent communication and by framing AI as a tool that enhances productivity rather than replacing jobs.

- Transparent communication strategies: A financial services provider emphasizes AI as a supportive tool to alleviate employee fears.

- Integration via multidisciplinary teams: Incorporating AI into workflows through cross-functional teams can help reframe AI’s role as a productivity enhancer.

- Employee engagement initiatives: Engaging staff in the AI implementation process builds trust and acceptance.

Prioritizing practical AI applications over hype

Amid AI hype, companies can focus on practical evaluation frameworks to assess real-world performance. This approach drives AI implementations to address operational needs effectively.

- Practical evaluation platforms: A Fortune 500 financial firm created a platform to test AI models on cost, accuracy, and efficiency in real workflows.

- Operational needs prioritization: Emphasizing AI solutions that meet specific operational requirements over generalized capabilities.

- Performance-based selection: Using tangible metrics to select AI applications that deliver practical benefits.

3.3 Decision considerations for build versus buy

Time-to-market and commoditization

Organizations often avoid investing in commoditized tools, focusing instead on initiatives that offer a competitive advantage. With agentic AI still emerging and limited vendor options available, companies must carefully decide between building internally or procuring externally. Firms like Fortune 500 telecom leaders have found that procuring existing technology can be faster and more efficient, but the scarcity of agentic AI vendors complicates this decision.

Use-case complexity and internal capabilities

The complexity of AI use cases and the organization’s internal expertise may heavily influence the strategy to build or buy. Companies with robust engineering teams may adopt a “build first” approach to understand requirements, while those with leaner teams might rely on external solutions to meet time-to-market demands.

- “Build first” approach: A multinational telecom provider builds internally to grasp baseline needs before considering vendors.

- Financial services lead in internal capabilities: This industry often has mature data stacks and robust data science teams supporting agentic applications.

Data privacy and industry-specific needs

Data privacy and compliance are critical in regulated industries, influencing the decision to build internally or partner externally. Financial services tend to favor internal builds to reduce risk, while less regulated sectors like retail may be more open to partnerships.

- Internal builds for compliance: A leading financial institution hosts proprietary models securely, using a “bring your own model” strategy with vendors.

- Balancing speed and privacy: Retail companies we surveyed collaborate with external providers, weighing flexibility against privacy considerations.

- Industry-specific approaches: Regulated industries such as financial services and insurance prioritize data security, affecting their build-versus-buy decisions.

While organizations may initially prefer building their own agentic AI solutions, factors like industry regulations, data privacy, and time-to-market pressures often dictate whether they choose to partner or purchase externally. As agentic AI tools mature, buying may become more feasible, but currently, the decision remains nuanced and specific to each industry’s needs.

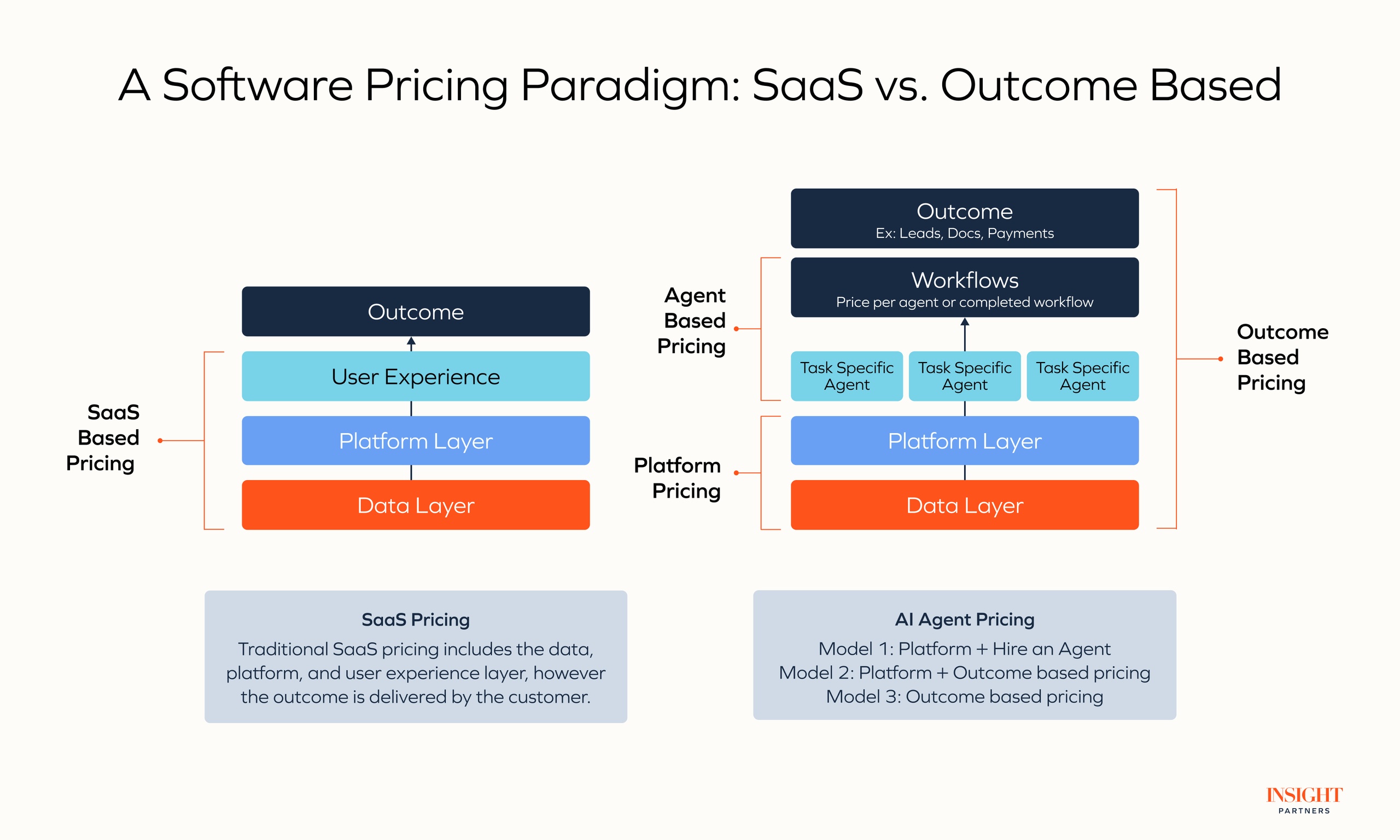

4. The economics of Agents

In the early 2000s, Salesforce transformed software pricing with subscription-based SaaS models, replacing rigid licenses with scalable, recurring billing tied to customer success. By the 2010s, mobile computing and product-led growth (PLG) ushered in usage-based pricing, exemplified by Snowflake’s data storage-based model. Now, the AI Agent era is shifting toward outcome-based pricing, aligning value with measurable results.

Emerging pricing models for AI Agents

Conversations with executives and founders reveal three key pricing models shaping the future of AI Agents:

- Platform + hire an Agent: A base platform fee plus a usage-based fee for “hiring” an AI Agent to complete workflows, similar to a full-time employee. Example: Cognition’s Devin charges per AI software developer, with a base fee and overage fees for exceeding work unit limits.

- Platform + outcome-based pricing: A hybrid model combining a platform fee with charges tied to achieving specific outcomes. Example: An AI Agent for call centers charges a base fee plus an outcome-based fee for onboarding customers via outbound calls.

- Pure outcome-based pricing: Enterprises pay solely for achieving defined business outcomes. Ideal for high-volume use cases like sales development, content generation, or procurement that replace significant OpEx.
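To compare how the three models above translate into a monthly bill, here is a small arithmetic sketch. All fees, included work units, and usage figures are hypothetical and chosen only to make the arithmetic easy to follow; they do not reflect any vendor's actual pricing.

```python
from dataclasses import dataclass

@dataclass
class Usage:
    work_units: int  # units of work the Agent completed
    outcomes: int    # business outcomes achieved (e.g., customers onboarded)

def platform_plus_hire(u: Usage, platform_fee: float = 1000.0,
                       included_units: int = 100, overage_per_unit: float = 5.0) -> float:
    # Base fee covers a work-unit allowance; overage is billed per extra unit.
    overage = max(0, u.work_units - included_units)
    return platform_fee + overage * overage_per_unit

def platform_plus_outcome(u: Usage, platform_fee: float = 500.0,
                          fee_per_outcome: float = 20.0) -> float:
    return platform_fee + u.outcomes * fee_per_outcome

def pure_outcome(u: Usage, fee_per_outcome: float = 35.0) -> float:
    return u.outcomes * fee_per_outcome

month = Usage(work_units=140, outcomes=40)
bills = {
    "platform + hire": platform_plus_hire(month),
    "platform + outcome": platform_plus_outcome(month),
    "pure outcome": pure_outcome(month),
}
print(bills)
```

Note how only the pure outcome model drops to zero in a month with no outcomes, which is one reason vendors may prefer to keep a platform fee early on.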

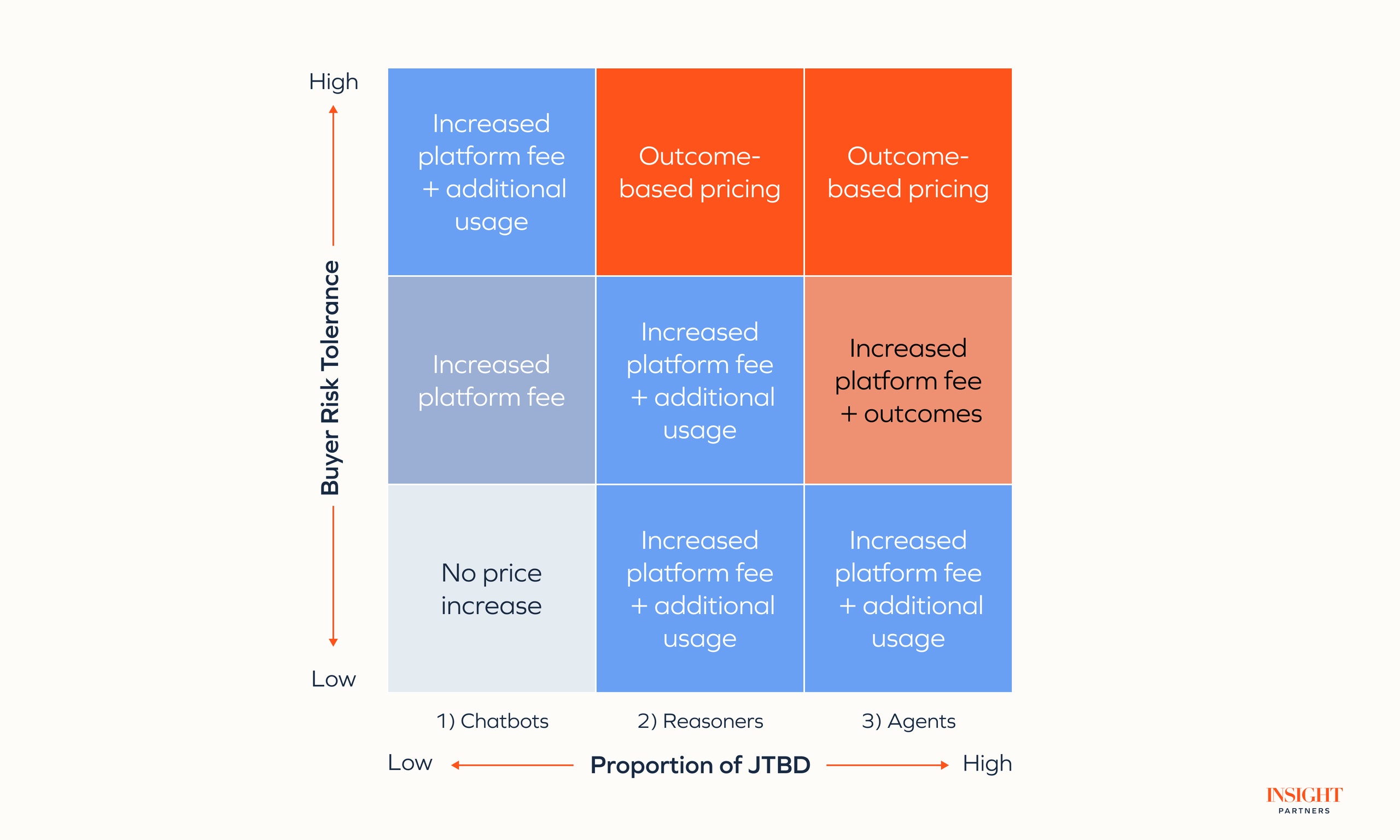

Barriers for startups

Startups may face significant challenges in monetizing AI Agents, including defining value, pricing strategies, and building scalable infrastructure. It’s often crucial for founders to consider the proportion of jobs-to-be-done (JTBD) delivered by the AI Agent in comparison with their buyer risk tolerance when determining AI Agent pricing models.

- Immature value delivery: Some AI Agents perform only simple or partial tasks, limiting their perceived value and monetization potential.

- Pricing model uncertainty: Undefined value delivery complicates pricing strategies and reduces flexibility.

- Ambitious pricing: Limited delivered value and customer skepticism cap acceptable pricing levels, with high prices deterring adoption.

- Incomplete systems: Startups often lack infrastructure for metering, rating, and monetizing usage at scale, requiring investment in scalable solutions.

Barriers for enterprises

Enterprises face notable challenges in adopting and budgeting for AI Agents.

- Unclear ROI: Some buyers are skeptical about the measurable return on AI Agents, hindering commitment.

- Complex pricing models: Validating outcomes and unfamiliar metrics like executions or prompts create uncertainty for procurement teams.

- Cost predictability: CFOs resist variable pricing due to difficulties in forecasting costs and adapting to novel models.

- Procurement shifts: AI pricing involves early engagement with P&L leaders to align procurement with business outcomes.

- Security and compliance: Risks tied to AI Agents slow adoption, especially in regulated industries.

Insight’s best practices for pricing

Regardless of whether your ideal pricing model is outcome-based, usage-based, or a hybrid, a phased approach over the next 12 to 18 months is important for long-term revenue growth. Founders and enterprises should prioritize discovery phases to define ROI and quantify outcomes with customers to achieve adoption.

Starting by including limited usage in existing plans, with a (reasonably) higher platform fee, avoids adding complexity to an already complex purchase decision. Shifting a higher proportion of the total price to usage or outcomes over time should be considerably easier once you have delivered value.

It’s crucial to consider the role that increased inference costs, driven by the adoption of your AI Agents, could play in your overall pricing model. Investment in infrastructure to track outcomes and integrate metrics into billing systems is critical, and is another reason why shifting too quickly to usage- or outcome-based pricing is risky. Engaging P&L leaders early helps align with enterprise KPIs.

For instance, one Insight portfolio company is dedicating 2025 to prioritizing the adoption of their Agents and defining high-value use cases before introducing a credit model to scale monetization, while another is piloting outcome-based pricing for AI Agents handling outbound insurance calls.

5. Considerations for enterprises

“If the solution exists in the market, buy it.”

Enterprises should consider a build versus buy framework. Is their use case intended to be built on top of internal data sets that are proprietary or customized to their workflow? If so, it might be better to build an agentic tool in-house. If the tool could become a competitive advantage that the enterprise can release to their external customers, it might also be worth the investment to build internally.

On the other hand, if the core capability of the agentic workflow is a commodity or already provided by a software vendor, purchasing makes more sense. Andrew Ng put it succinctly at our ScaleUp:AI ’24 conference: “If the solution exists in the market, buy it.”

Start with internal use cases

An enterprise could have several different use cases for agentic workflows, and it might be easier to start with internal-facing use cases and then create external, customer-facing use cases as workflows become more deterministic, guardrails are put in place, and employee confidence in agentic workflows has been built. Focus on using AI where automation or streamlined workflows would actually drive value. Agents can have a much bigger impact depending on the application they sit in.

Measuring outputs to track ROI

Tracking the ROI of Agents is hard, so aligning on ROI metrics ahead of trialing an Agent is key. Creating good evaluation methods to measure the output of the Agent, and then using them to refine and fine-tune the Agent, is critical.

Supplemental scaffolding

Consider supplementing your agentic workflow with scaffolding that can plug potential gaps in production. Agent orchestration layers can provide a framework for multiple Agents working together, enabling an enterprise to uplevel the complexity of their use case. A PII redaction tool such as Tonic.ai can be critical if using customer or individual-level data.

Managing and tracking hallucinations is crucial. Enterprises can often get more comfortable with a human-in-the-loop model to provide quality assurance and prevent errors, especially if the workflow is customer or client-facing.

Successful cultural integration

As with any major tech change, culture and change management are necessary components of running Agents in production. Fear of job displacement and resistance to agentic workflows can hinder adoption. Successful implementation may require a change management plan that integrates Agents without disrupting existing workflows, and it can start with a user-in-the-loop to get up and running with higher confidence.

6. Considerations for builders

Both software incumbents and new AI-native startups can build Agent workflows to sell to enterprises and SMBs alike. The latter have an advantage in building and shipping these agentic products because they carry no tech debt from an existing product, but we’ve already seen many large platforms release agentic features.

Balancing scope with output consistency

Builders can create specialized Agents customized with an SLM or a fine-tuned LLM, or they can offer a generalized Agent. The key is to balance scope with consistency of output. If not building a specialized Agent, builders should think about what advantage they have over other generalized Agents. Can they build more extensive workflows around that generalized Agent and turn them into specific products or applications?

The critical point is to think hard about the value-add an Agent provides versus the model and middleware. With model capabilities expected to focus on agentic workflows in 2025, builders need to start with a deep understanding of an existing workflow and figure out how to reimagine it using an agentic architecture.

Consider a consultative sales approach

Often, the fastest way for AI Agent builders to deliver value for end users is a consultative sales approach, in which builders spend time deeply understanding the user workflow and iterating with users on fine-tuning the Agent’s output and performance. Nearly every successful AI startup has this in their playbook; we are past the days of lobbing the software over the fence and just collecting ARR.

Competing with incumbents

Incumbents are racing to keep pace with innovation in AI Agents. These incumbents have the advantages of enterprise familiarity, completed procurement processes, and data moats. Consider focusing on categories where the incumbent is less popular or is becoming a “legacy” product.

Adaptability with Agents

Ideally, builders will create modular and extensible Agent ecosystems that can adapt to different environments, applications, and workflows that their end customers might be using.

Selling to enterprise

If trying to sell to enterprises, builders must ship with a maniacal focus on the scaffolding around Agents that enterprises need, including data governance, identity and access management, operational visibility, explainability, and auditability.

Multi-agentic approaches

Multi-agent approaches allow builders to leverage not only the “scaffolding” of tools and functions but also “Service Agents” such as a Salesforce Agent, a ServiceNow Agent, or Stripe’s Payment Agent to create an application use case. These Service Agents go well beyond an API and could create very interesting combinations.

Pricing models and customer perception

Aligning the pricing model with the value customers perceive in the product is crucial, and difficult. Builders should consider a crawl, walk, run approach to outcome-based pricing. Focus on driving adoption first, then value, using a proxy for outcomes such as tasks or workflows as the intermediate step.

Be deliberate about who the product is sold to — if builders try to support all use cases for all companies, they might end up supporting no use cases.

If you’re building in this space and want to continue the conversation, please reach out.

Further reading

- State of Enterprise Tech 2024

- AI agents: A new architecture for enterprise automation

- Beyond bots: How AI agents are driving the next wave of enterprise automation

- From selling access to selling work (and what it means for you)

Editor’s notes:

Some information in this article was sourced through interviews with Insight IGNITE’s Executive Network.

Insight has invested in Workato, CrewAI, Skyflow, Privacera, Relevance, Writer, Bardeen, SourceGraph, Swimm, CMD Zero, Torq, Reco, Espressive, Cognigy, Jasper, Rohirrim, ContractPod, Tricentis, HourOne, Databricks, Glia, Aily Labs, Weights and Biases, Copado, Fiddler, and Fairmarkit.

*Market map sources:

AI SOC Analysts: LLMs find a home in the security org

Beyond Bots: How AI Agents Are Driving the Next Wave of Enterprise Automation

Overhauling logistics with AI: a $79 billion opportunity

The Rise of AI Agent Infrastructure