AI Agents are disrupting automation: Current approaches, market solutions and recommendations

The mainstreaming of AI tools has ignited hope for dramatic productivity improvements for knowledge workers and consumers alike. Transformer-based Large Language Models (LLMs) have demonstrated AI capabilities that are transforming workflows with new automation approaches. In the article below, we trace the automation journey in the age of AI and dig into some of the current and evolving platforms. This article is based on numerous conversations with researchers, builders, incumbents, and enterprise users, as well as internal discussions within Insight.

Let’s start with a few predictions on how we see the automation space evolving:

- Everyone will have an AI assistant, from consumers to knowledge workers. This will redefine traditional boundaries between vertical applications, automation platforms, and IT services, creating transformational market opportunities for entrepreneurs. AI assistants will take different forms, from copilots for incumbent platforms to applications with embedded AI and various forms of AI Agents.

- Human-in-the-loop is the operative framework for deploying generative AI solutions. Most use cases today are in experimentation or early production, with a focus on advisory and assistant-oriented workflows. LLMs can’t yet predictably plan or reason, and areas like memory and context are still in research. In automation platforms where deterministic execution is critical, LLMs are being used for specific tasks at “design time,” not at “run time.”

- Automation is a hard problem and is often underestimated. Incumbents are combining AI with their playbooks and deep experience to improve platform efficiency and UX. State-of-the-art LLM providers are adding Agent modeling, collaboration, and access to tools to enable users to rapidly build AI Agents (GPTs). ScaleUps looking to break through need to deliver differentiated customer value with reimagined workflows grounded in unique datasets and a simple UX.

- Deployment of Automation with AI will take a “Crawl, Walk, Run” approach, starting with simple tasks and progressing to more complex workflows. The key is to keep experimenting with Agents, learn where AI capabilities truly add value, and ensure the right “scaffolding” in terms of data, tools, and run-time is part of the Automation architecture. As AI model capability grows, the balance can gradually shift toward leveraging more AI functionality.

- Code generation has emerged as a foundational element in developing genAI-based applications and Agentic Automation platforms. Code has two key attributes that make it an ideal LLM function: It is a form of text, and it has well-defined performance measures. Initial versions of coding copilots are widely deployed today, and we are now seeing full-fledged AI-enabled developer platforms. Code-gen LLMs will play a critical role in Agent architectures.

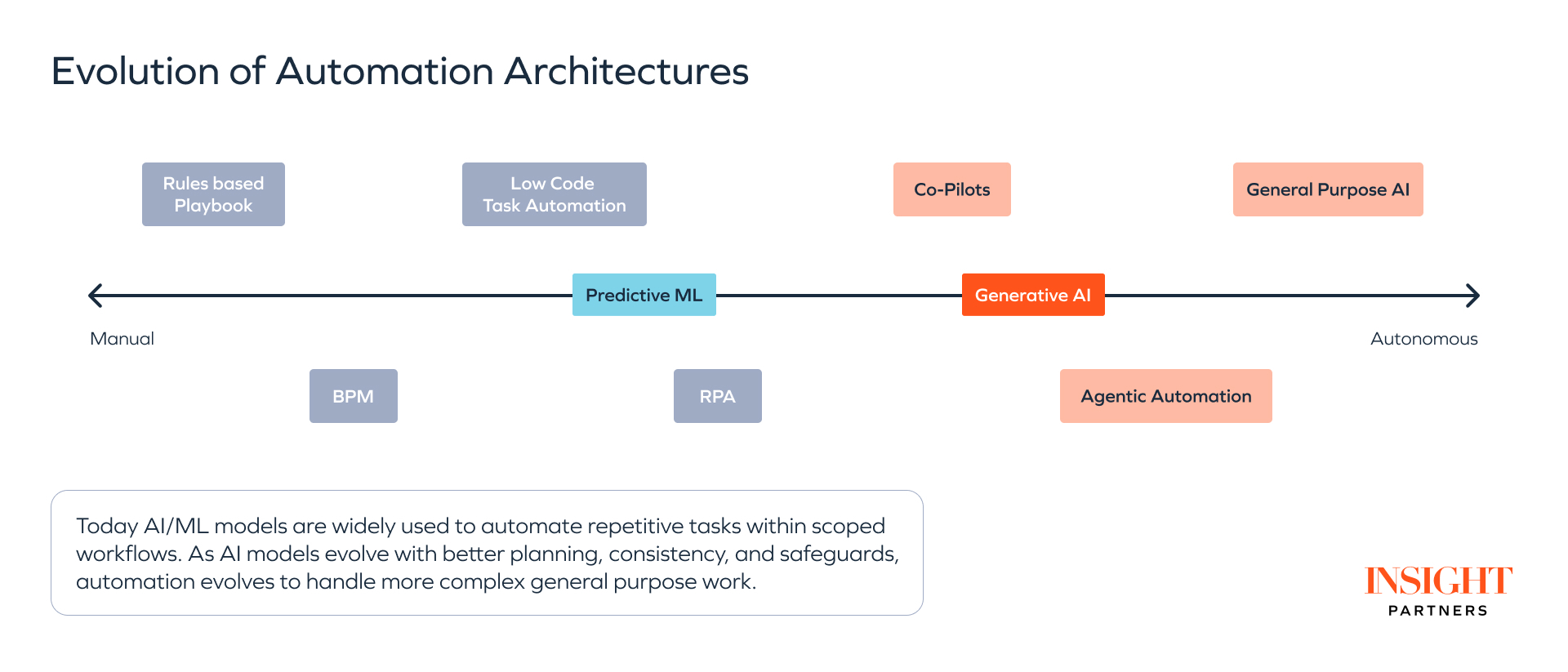

Evolution of Automation platforms

Automation is a constant human endeavor. Every knowledge worker is familiar with the humble “macro” — a shortcut for a repetitive set of commands to add an extra boost of productivity.

Early automation efforts focused on workflows like Quote-to-Cash and Payroll, with engineers writing bespoke code to stitch together workflows governed by static rules and definitions. The brittleness of these early approaches drove the development of the first generation of automation platforms, such as:

- Robotic Process Automation (RPA) platforms deliver maximum value in automating repetitive manual tasks. They combine a library of predefined workflows and a low-code/no-code platform to help users build their own playbooks. RPA platforms have progressively incorporated AI/ML models to expand their capabilities.

- iPaaS platforms like Workato started by creating a middleware layer to integrate data, application sources, and APIs to connect different resources. This data layer is a critical input for Automation engines and creating a clean interface is the first step in the Automation journey.

- Low-Code Task Automation platforms offer a predefined set of integrations with a simple UI to automate repetitive tasks for knowledge workers and SMBs.

- Various Vertical Automation approaches focused on specific workflows in domains such as supply chain, IT operations, and the developer ecosystem, as well as chatbots for customer-facing use cases like help desks and customer service teams.

While these platforms significantly reduced repetitive work, realizing the value of automation still required substantial bootstrapping, either through predefined workflows or consultative rollouts. Implementations have also been brittle when the enterprise operating environment changes.

GenAI has the potential to accelerate this automation journey as incumbents incorporate compelling capabilities into their platforms today, builders experiment with new architectures, and researchers push towards the ultimate goal of an autonomous Artificial General Intelligence (AGI).

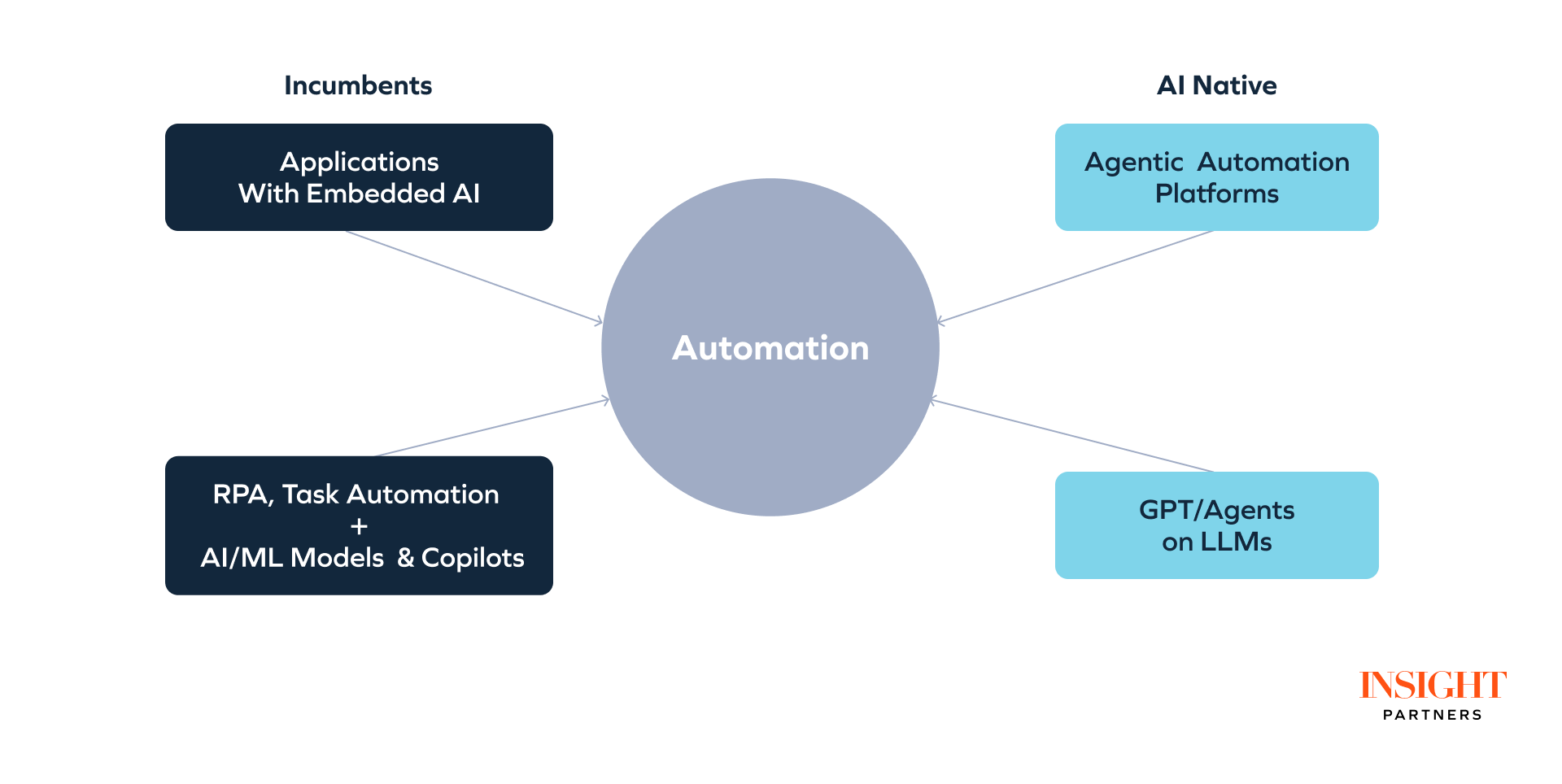

AI in Automation: Different players, distinct approaches

Automation in the enterprise is often a complex task; some practitioners even describe it as a complex orchestration of multiple elements to execute workflows. With the advent of genAI, incumbents and startups/ScaleUps are approaching this opportunity from different angles.

- RPA and Task Automation platforms bring a significant incumbency advantage, with a deep library of automated workflows and experience working with enterprises on complex workflows. GenAI offers an opportunity to address the brittleness and bootstrapping problems with a simplified user experience.

- Application platforms like Microsoft 365 and Notion are embedding AI directly into the platform and user workflow to help complete tasks, offer suggestions, and generate content to assist in the user’s workflow.

AI-native approaches start with an application or workflow and reimagine it from a first-principles perspective. On the application front, a new generation of productivity tools like Swimm and Writer have provided a compelling showcase for genAI transformation of work. Similarly, many vertical applications in sales, marketing, legal, and finance use genAI capabilities to simplify complex workflows.

LLM providers and startups/ScaleUps are taking a fresh approach to automation leveraging Agents to harness genAI capabilities to execute simple workflows. Other approaches connect LLMs with the required “scaffolding” to address complex workflows and applications. Agentic automation today is an area of constant innovation and research as builders experiment with models, architectures, and tools.

RPA and Task automation platforms

Current generations of automation platforms have aggressively embraced newer ML and AI models. A brief overview of the current state of these platforms follows:

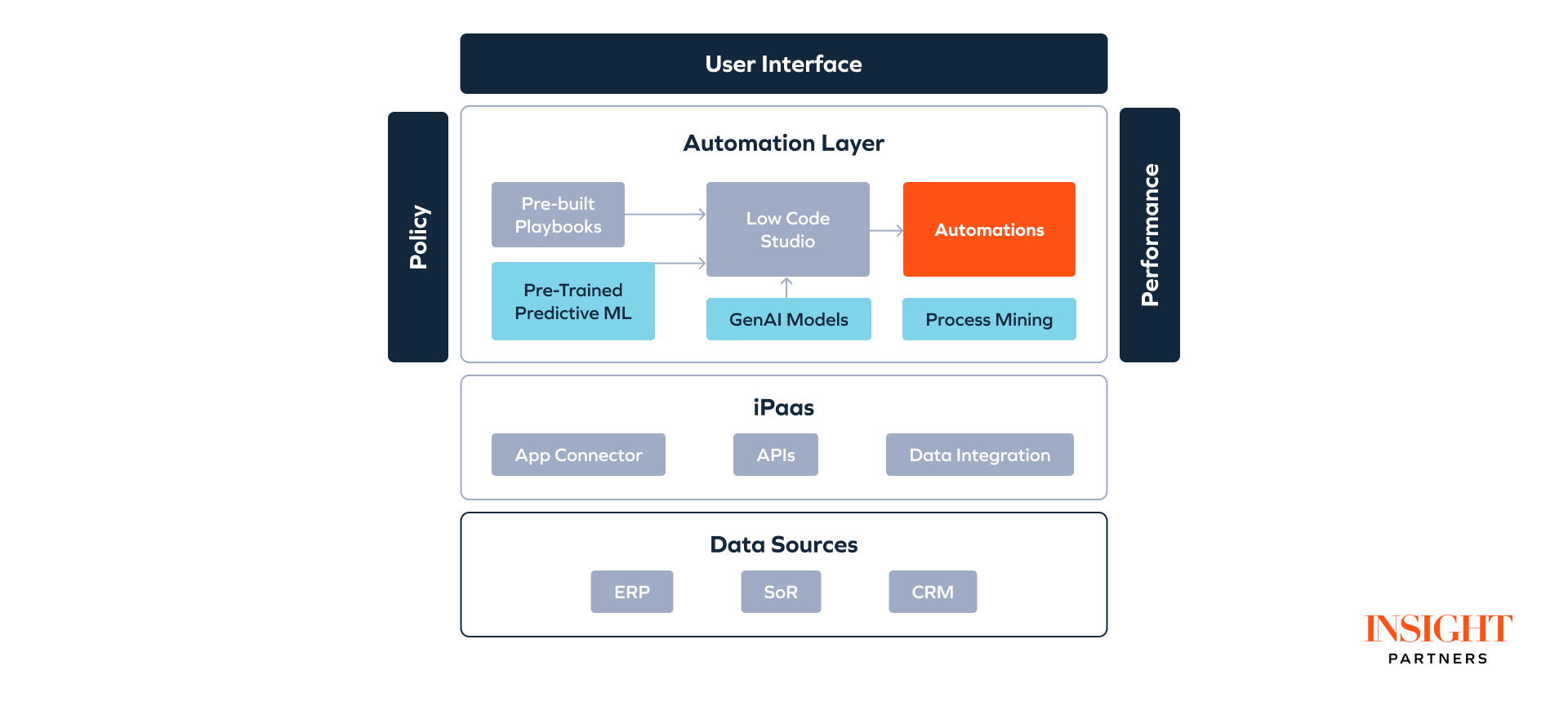

User Interface connects to a low-code studio where users can build, deploy, and verify automations. This interface is also used to monitor performance, track usage per policy, and even measure ROI for the automations they create.

iPaaS plays a critical role as the middleware, bringing together data from applications, data stores, and event streams to create an efficient interface to the automation layer.

Automation layer uses the template from the studio to select from pre-built playbooks, draw on a library of predictive ML models and tools, or execute new workflows. Some common use cases include:

- Extracting data from unstructured sources like images or emails and filling out a form.

- Observing humans (e.g. read screen, track keystrokes) to produce a repeatable workflow or suggest potential new automations.

- Extracting data from an inventory system and using ML models to create a forecast.

Incumbents are using genAI to both simplify user engagement and offer new workflows, such as:

- Type in a task such as “sales prospecting,” and the copilot translates the intent and searches the library of automations to offer users a starting point for their task.

- Create a form and update it with the appropriate fields based on pre-trained templates. Fill in the data extracted from various unstructured sources.

- Generate the “low” code to create automations based on a natural-language (NL) description, along with test cases to validate the output and a description of the workflow.
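
The first workflow above, matching a typed intent to a library of automations, can be sketched in a few lines. This is a minimal illustration rather than how any vendor's copilot works: the library entries, `tokenize`, and `suggest_automations` are hypothetical names, and simple word overlap stands in for the intent translation an LLM or embedding model would perform.

```python
import re

# Hypothetical library of prebuilt automations (names and descriptions are invented).
AUTOMATION_LIBRARY = {
    "lead_enrichment": "find sales prospects and enrich lead records in the CRM",
    "invoice_intake": "extract invoice data from email attachments and fill a form",
    "inventory_forecast": "pull inventory data and forecast stock levels",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def suggest_automations(intent: str, top_k: int = 2) -> list[str]:
    """Rank library entries by word overlap with the user's stated intent."""
    query = tokenize(intent)
    scored = []
    for name, description in AUTOMATION_LIBRARY.items():
        overlap = len(query & tokenize(description))
        if overlap:
            scored.append((overlap, name))
    # Highest overlap first; ties broken alphabetically for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:top_k]]


suggestions = suggest_automations("sales prospecting")
```

The returned names would serve as the "starting point" offered to the user, who still chooses and adapts the automation.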

AI tools let these platforms build on their incumbent advantage (customers and playbooks) by accelerating time-to-value for users. Better UI/UX reduces the consultative bootstrapping users typically need to get started in complex deployments. As LLM capabilities evolve, we can expect RPA and Task Automation to also grow in capability.

“In the future all human interaction with the digital world will be through AI agents.”

– Yann LeCun

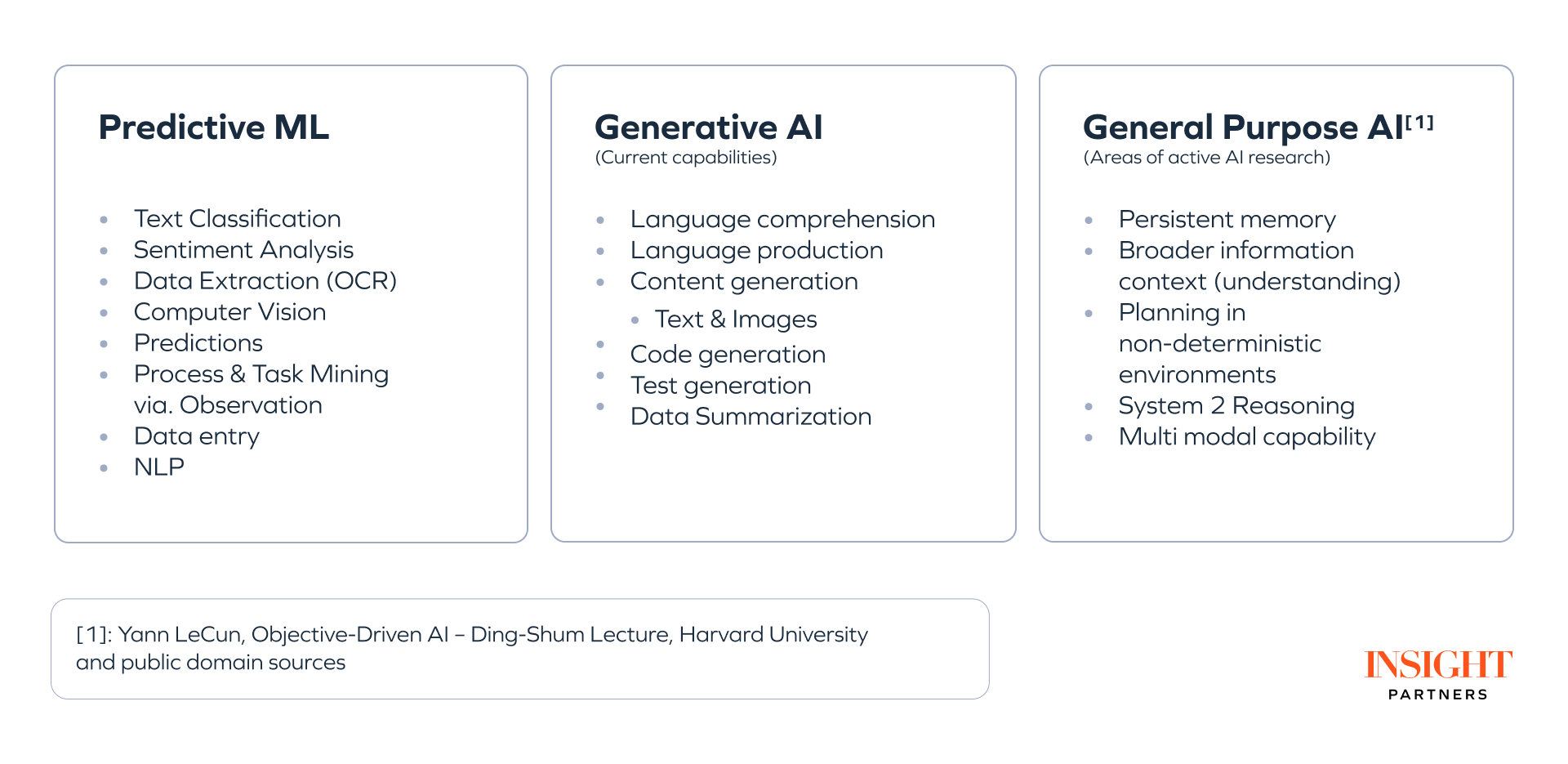

Agentic automation frameworks: Copilots/GPTs and Agents

It might be helpful to define terms commonly used to refer to genAI use cases in the market.

- Copilots are genAI-based interfaces to existing applications and platforms, which offer users simplified ways to discover and augment their existing features.

- Agents combine LLM capabilities with code, data sources, and user interfaces to execute workflows. There are a couple of approaches builders are working on:

  - Simple wrappers around an LLM or an LLM trained for a specific task (code gen).

  - A Mixture of Experts architecture with “scaffolding” to combine task-specific agents, pre-defined code/workflows, and external tools to reimagine applications or automate complex tasks.

- General Purpose Agents aim to automate any task by simply describing it. This remains a long-term goal for researchers requiring ongoing AI advancements — learn more in “References and further reading,” below.

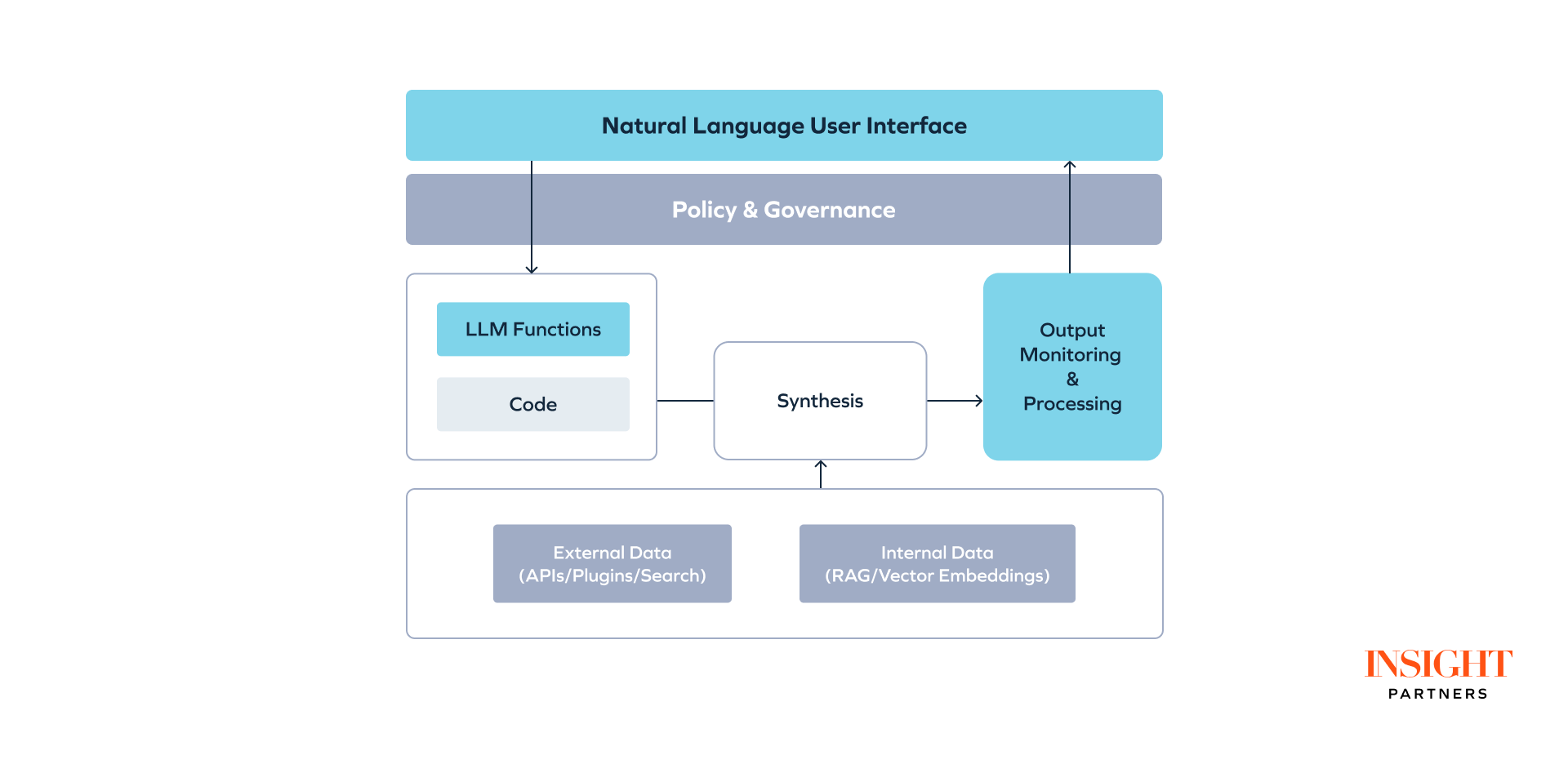

No-code agents/GPTs

AI Agents started as experiments, with builders such as Yohei Nakajima releasing projects like BabyAGI, which built on native LLM functionality to run simple automations. LLM providers now offer compelling no-code platforms with a library of plugins to external resources to build custom versions of the LLM. For many simple tasks or one-off automations, this might be a fast way to get going.

In this approach, a no-code console allows users to provide a detailed description of the task or use few-shot prompting to guide the LLM in building a task agent. LLM providers now offer integrations with data sources and applications, enabling the Agent to leverage external data as part of its workflow. The agent can also use proprietary data, using techniques like Retrieval Augmented Generation (RAG) for accuracy. APIs bring in external tools like search.
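
To make the combination of task description, few-shot prompting, and RAG concrete, here is a minimal sketch of how such a prompt might be assembled. The documents, the few-shot example, and the function names are all hypothetical, and word overlap stands in for the vector search a real RAG pipeline would typically use. No LLM is called; the sketch stops at the prompt.

```python
import re

# Hypothetical proprietary snippets; a real deployment would index enterprise documents.
DOCUMENTS = [
    "Refunds over 500 USD require manager approval.",
    "Standard shipping takes 3 to 5 business days.",
    "Refunds are issued to the original payment method.",
]

# Hypothetical few-shot example showing the desired answer style.
FEW_SHOT = [
    ("How long does shipping take?", "Standard shipping takes 3 to 5 business days."),
]


def words(text):
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, k=2):
    """Return the k documents sharing the most words with the question."""
    q = words(question)
    ranked = sorted(DOCUMENTS, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]


def build_prompt(question):
    """Assemble the task description, few-shot examples, and retrieved context."""
    lines = ["Answer using only the context provided.", ""]
    for q, a in FEW_SHOT:
        lines += [f"Q: {q}", f"A: {a}", ""]
    lines.append("Context:")
    lines += [f"- {doc}" for doc in retrieve(question)]
    lines += ["", f"Q: {question}", "A:"]
    return "\n".join(lines)


prompt = build_prompt("Who approves refunds over 500 USD?")
```

Grounding the prompt in retrieved text is what lets the Agent answer from proprietary data without that data being part of the model's training set.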

Advanced Agents, as shown above, can be built around an LLM’s capabilities with glue code to bring together these various elements into a unified Agent. LLM providers are expected to continue launching new capabilities such as Agent modeling, collaboration, more tool access and prebuilt functions, reflection, safety guardrails, etc., making them powerful platforms for building Agents.

Mixture of Experts agent architectures

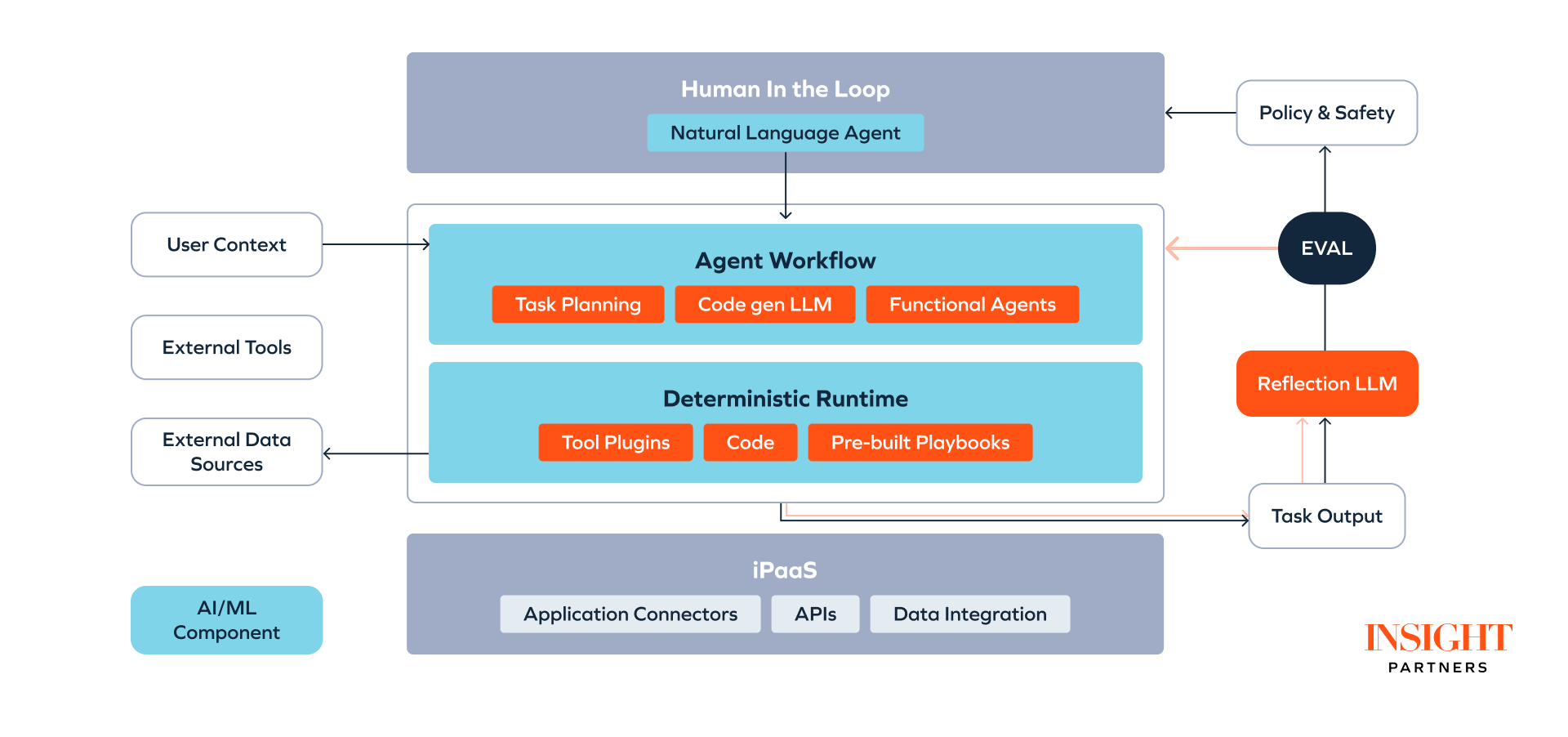

Builders like Bardeen, Imbue, and MultiOn are attacking the problem of delivering deterministic outcomes for complex workflows with a Mixture of Experts (MoE) Agent framework. The idea is to segment a workflow into tasks assigned to specific Agents or functions and provide each Agent with the required “scaffolding,” including data, rich sets of tools, and interfaces. An approximation of the architecture is described below:

User Interface

The user-facing LLM enables a user to describe the task and leverage the context window to provide relevant context such as few-shot examples. Newer UI approaches incorporate user context and interactivity, allowing the user to steer the Agent and refine its approach. This design enables a more fluent “Human-in-the-loop” interface to provide input and certify the final output.

Task Agents

The workflow can be decomposed into distinct tasks executed by LLM Agents, some of which are detailed below. This makes the most of current LLM capabilities and enables flexibility in using Task agents for specific functions with clean abstractions and options to upgrade or reconstitute them in the future.

- Planning Agents today might propose a plan which breaks user intent into Task lists, which are approved by humans before routing for execution. This remains an active research area.

- Routing Agents map each task to the appropriate AI/ML Agent or predefined workflow.

- Functional agents are trained on specific tasks (genAI or predictive ML models).

- Codegen Agents translate tasks into code, such as a SQL query.

- A Reflection LLM iterates on the output to evaluate its quality and refine the final result. Platforms like Devin have demonstrated the effectiveness of this technique to improve output accuracy.
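
The division of labor among these Task agents can be sketched as a small pipeline. This is an illustrative skeleton under stated assumptions, not any builder's actual architecture: every function name is hypothetical, and each agent is a plain-Python stub standing in for what would be an LLM call, an ML model, or a predefined workflow.

```python
from typing import Callable


def plan(intent: str) -> list[str]:
    """Planning agent stub: a real one would ask an LLM to decompose the intent."""
    return ["fetch_data", "summarize"]


def approve(tasks: list[str], reviewer: Callable[[list[str]], bool]) -> list[str]:
    """Human-in-the-loop gate: nothing runs unless the reviewer accepts the plan."""
    if not reviewer(tasks):
        raise PermissionError("plan rejected by reviewer")
    return tasks


def fetch_data(state: dict) -> dict:
    """Predefined workflow (no LLM): returns fixed rows for illustration."""
    return {**state, "rows": [3, 5, 8]}


def summarize(state: dict) -> dict:
    """Stands in for a functional agent trained on a specific task."""
    return {**state, "summary": f"total={sum(state['rows'])}"}


# Routing table mapping task names to agents or predefined workflows.
ROUTES: dict[str, Callable[[dict], dict]] = {
    "fetch_data": fetch_data,
    "summarize": summarize,
}


def reflect(state: dict) -> dict:
    """Reflection stub: verifies the output; a real agent would critique and retry."""
    expected = f"total={sum(state['rows'])}"
    return {**state, "checked": state.get("summary") == expected}


def run(intent: str, reviewer: Callable[[list[str]], bool]) -> dict:
    """Plan, get human approval, route each task, then reflect on the result."""
    state: dict = {"intent": intent}
    for task in approve(plan(intent), reviewer):
        state = ROUTES[task](state)
    return reflect(state)


result = run("weekly sales summary", reviewer=lambda tasks: True)
```

Because each agent sits behind a simple function boundary, any one of them can be upgraded or swapped without touching the others, which is the "clean abstractions" point made above.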

Deterministic runtime

To deliver a final output that is consistently right, composing the outputs of the various tasks on a deterministic runtime has proven to be good practice. For example, for finance use cases, the codegen LLM generates SQL queries that are executed in the runtime for precise data extraction.

The core design principle of the MoE architecture is to use AI/ML models only where needed and leverage predefined workflows/playbooks everywhere else. LLMs are used at design time, and their outputs are synthesized in a deterministic runtime.
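
A minimal sketch of this design-time/run-time split, using Python's built-in `sqlite3` module: `generate_sql` is a hypothetical stand-in for the codegen LLM and returns a fixed query, and the `invoices` table and its rows are invented for illustration. The validation step is deliberately naive; a production system would need a real SQL parser and database-level permissions.

```python
import sqlite3


def generate_sql(task: str) -> str:
    """Stand-in for a codegen LLM; returns a fixed query for illustration."""
    return "SELECT region, SUM(amount) FROM invoices GROUP BY region ORDER BY region"


def validate(sql: str) -> str:
    """Design-time check: allow only a single read-only SELECT statement."""
    stripped = sql.strip().rstrip(";")
    if not stripped.upper().startswith("SELECT") or ";" in stripped:
        raise ValueError("only single SELECT statements are allowed")
    return stripped


def run_query(conn: sqlite3.Connection, sql: str) -> list[tuple]:
    """Run time: the database, not the LLM, computes the answer."""
    return conn.execute(validate(sql)).fetchall()


# Hypothetical finance data in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE invoices (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO invoices VALUES (?, ?)",
    [("EMEA", 100.0), ("EMEA", 50.0), ("APAC", 70.0)],
)

rows = run_query(conn, generate_sql("revenue by region"))
```

The LLM's contribution ends once the query text is produced and validated. The same query run against the same data always returns the same rows, which is the determinism the runtime is there to provide.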

Agent-to-Human AI interfaces

As discussed, a human-in-the-loop interface is a key aspect of the architecture today. Builders are grounding Agents in user context with numerous approaches, ranging from inputs in the context window to designing Agents as browser extensions that can observe user behavior and capture context. LLM plugins bring in external data or tools, a key aspect of giving Agents more skills. Finally, the Agent can use APIs to communicate with user platforms such as email, productivity, and communication tools, emulating a typical human workflow.

Agent-to-Agent interfaces are an area of active research and development. In an MoE model, Task agents with different abilities will need to interact as discussed above. In time, we could envision Agents interacting with other Agents to accomplish tasks, much as APIs connect applications today.

Considerations for enterprises deploying automation

- Most enterprises already use a range of automation platforms, from classic RPA and Task automation platforms for specific tasks to home-grown solutions. Productivity from AI is still more hype than reality. Candidates for genAI-based automation need to undergo a clear-eyed cost/benefit analysis, as they will follow a similar maturation curve to previous approaches.

- “It’s the data, stupid.” AI agent performance is directly correlated with the quality and relevance of its training data. For a lot of enterprises, the journey begins with creating clean and focused data sets and pipelines that can ground the models.

- The LLM landscape is evolving rapidly with the impending releases of GPT-5 and Llama 3, which will reset the state-of-the-art (SOTA) bar. At the same time, multiple models at GPT-4-level performance are now available at attractive cost points. Enterprises now have models from different sources, at different cost-performance levels, to choose from based on use-case and functionality needs.

- At a platform level, the market has several choices. Incumbents are embedding AI or offering copilots to accelerate time-to-value for users. Startups/ScaleUps and LLM providers are taking an AI-native approach to reinvent vertical use cases or create new platforms to transform the cost, performance, and UX. The workflow and performance benchmarks should drive choices.

- LLMs today are very sensitive to prompting, and slight variations can cause drift in the model output. Establishing clear measures of performance at the use-case level (versus the model level) is key. The same goes for governance and data security. Human-in-the-loop is a fundamental feature of all AI deployments today.
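
One way to measure performance at the use-case level is to score the same task phrased several ways, tracking both accuracy and whether the variants agree with each other. The sketch below assumes a hypothetical routing use case. `fake_model` is a stand-in for an LLM call and is written to be deliberately sensitive to wording, and the test cases are invented.

```python
def fake_model(prompt: str) -> str:
    """Stand-in for an LLM call; deliberately sensitive to prompt wording."""
    if "invoice" in prompt.lower():
        return "finance"
    return "unknown"


# Hypothetical use-case test set: each case phrases one request several ways.
CASES = [
    {
        "variants": ["Route this invoice query", "Where does this INVOICE go?"],
        "expected": "finance",
    },
    {
        "variants": ["Route this billing query", "Route this invoice dispute"],
        "expected": "finance",
    },
]


def evaluate(model, cases):
    """Return (accuracy over all variants, share of cases whose variants agree)."""
    correct = total = consistent = 0
    for case in cases:
        outputs = [model(v) for v in case["variants"]]
        correct += sum(o == case["expected"] for o in outputs)
        total += len(outputs)
        consistent += len(set(outputs)) == 1
    return correct / total, consistent / len(cases)


accuracy, consistency = evaluate(fake_model, CASES)
```

Tracking consistency separately from accuracy surfaces prompt-induced drift that an aggregate accuracy number would hide.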

Considerations for builders in automation

- Builders can adopt a “crawl, walk, run” approach with genAI in automation platforms. Building a differentiated solution requires a deep understanding of the user, the use case, and its performance benchmarks, and treating the LLM as a tool whose capabilities are matched to the task.

- LLMs are primarily System 1 thinkers (reflexive responses based on trained data). Builders are using LLMs where needed for differentiated capabilities, and pre-defined functions/playbooks or ML models where possible. Focused and targeted data sets are critical to grounding the models.

- For complex use cases, constant experimentation and the right “scaffolding” (user context, access to external tools and datasets, Reflection mechanisms, etc.) are foundational aspects of a “Mixture of Experts” Agent architecture.

- A simple text-based UI is a good first step. Builders are innovating by adding real-time interactivity and multimodal UI so that users can more actively track the LLM’s task lists, evaluate outputs, and offer feedback to steer the output.

- Bringing differentiated data sets with the right governance and thinking through safety tradeoffs, security guardrails, and performance are important to avoid regulatory and compliance issues at deployment time in end-user environments.

Generative AI Agentic use cases

Our conversations [10] with enterprises reveal various efforts underway in Agentic Automation. A few use cases are detailed below:

- Chief Data Officer at an F100 telecom: “We are building autonomous agent workflows to link tables and databases together, joining multiple data sources and then taking actions or making recommendations from the data.”

- VP of Data and AI at a global consulting firm is building agentic workflows to enable data analysts to capture insights from disparate spreadsheets.

- SVP Data and AI at an F500 construction and real estate firm is building an agentic application linking Palantir, OpenAI, and an internal copilot to select winning RFP bids from thousands of submissions.

- SVP at a large bank: “I see two active use cases for GenAI. One, coding copilots which are rolled out to all our engineers, we are seeing around 20%+ productivity improvement for senior engineers. We are looking forward to newer capabilities here. Two, chatting with documents with LLMs, RAG has significantly improved the way to preserve privacy while grounding the models. Chatbots have been an experimental roll out, and we are still perfecting this use case to account for safety and compliance.”

- Chief Digital Officer at a large bank: “Automation will take many forms in our estate. We have a large footprint of RPA, vertical automation platforms for ITSM, etc., and have built Agents on LLMs. We are actively experimenting with Agentic Automation architectures and learning as we go. Some incumbent vendors have shown great agility incorporating AI.”

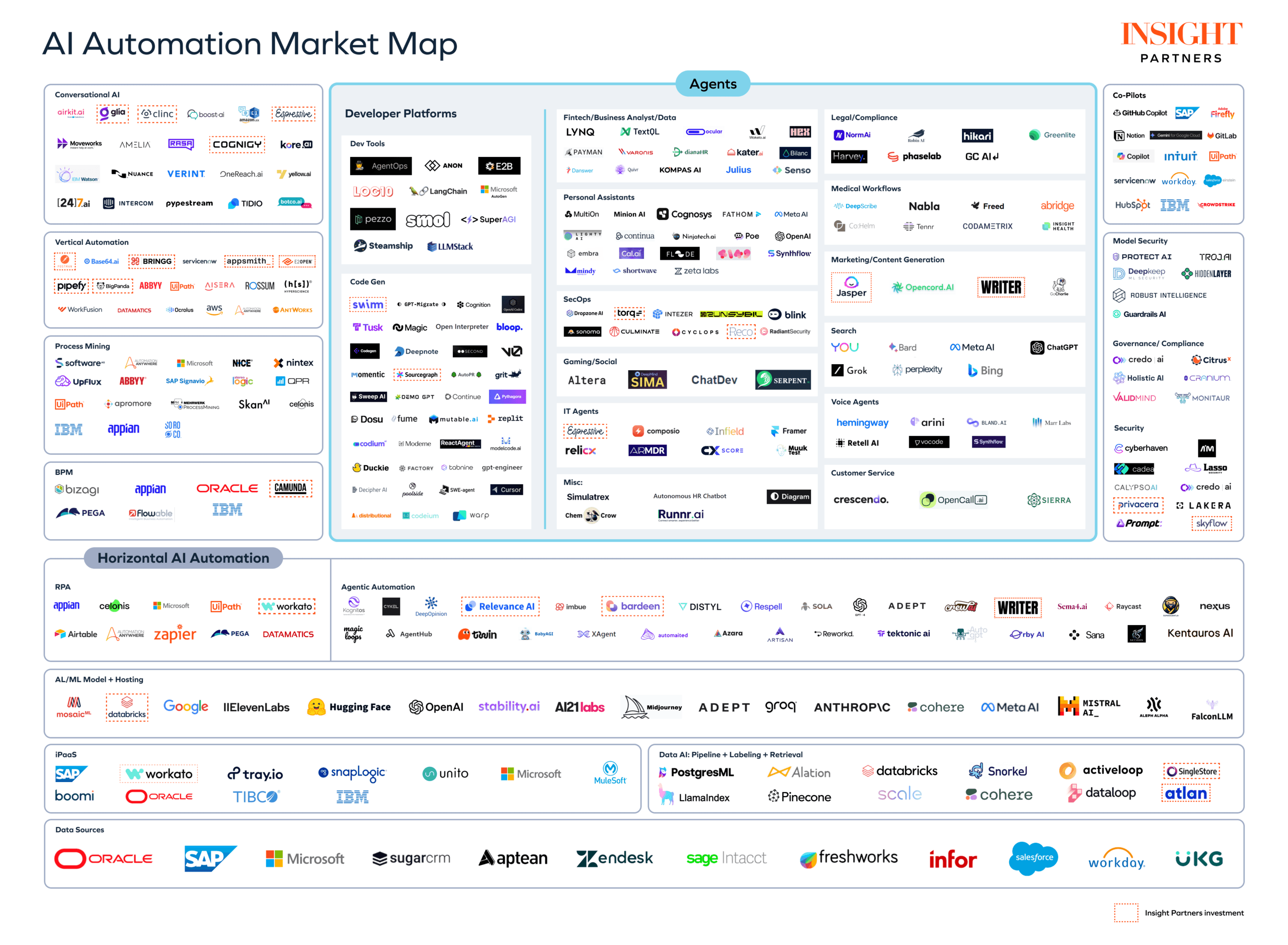

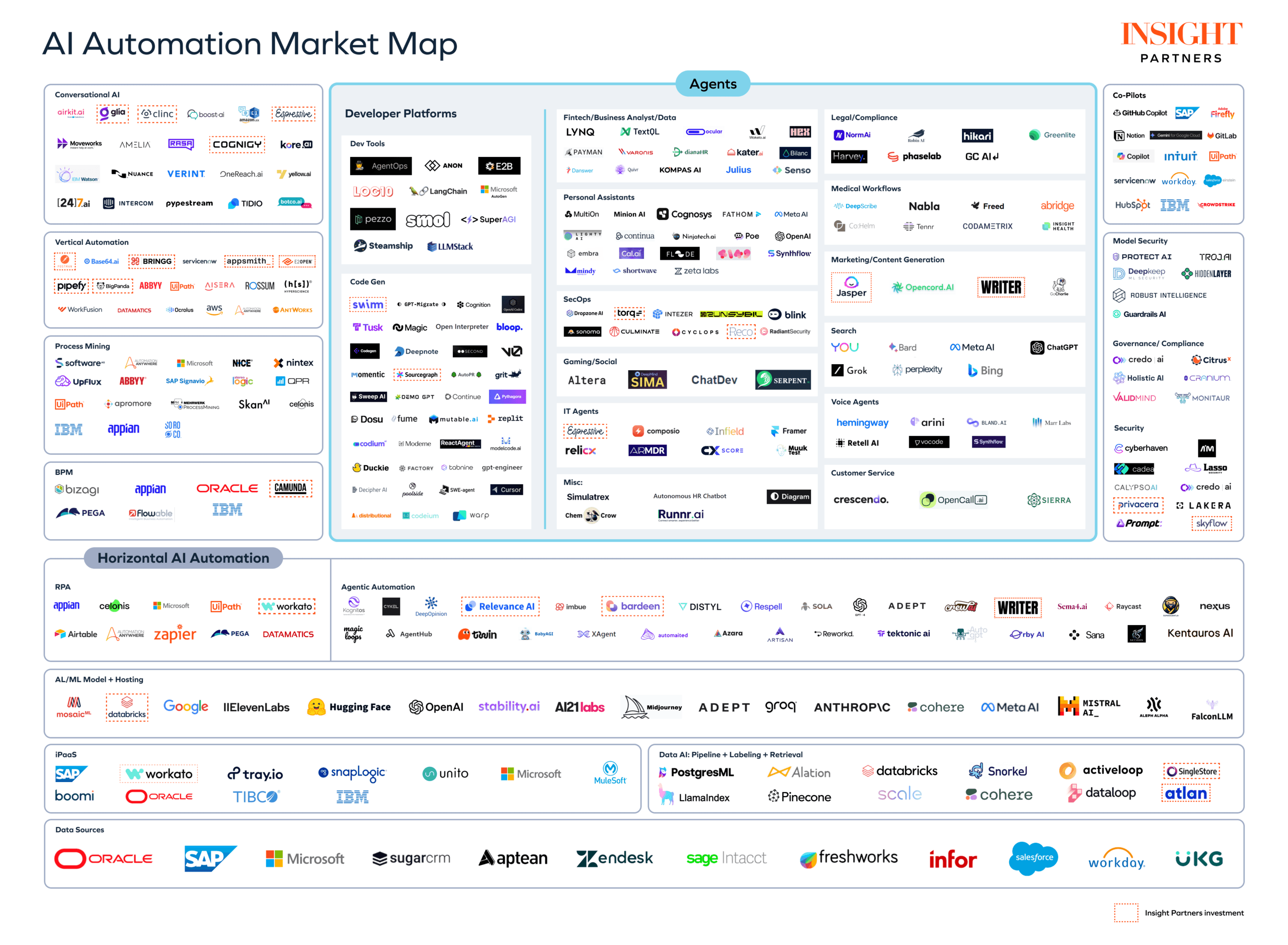

AI Automation market map

The pace of innovation in AI is only accelerating. An important caveat is that many of the approaches discussed here are in the experimental and early production stages.

As the world of Agents and Automation evolves, we are committed to actively tracking and updating the state of play in this space. Meanwhile, we welcome the opportunity to work with founders building Agentic Automation, reimagined vertical applications, and differentiated infrastructure platforms, and we look forward to feedback and dialogue with the community.

Note: Insight Partners has invested in Workato, Jasper, Writer, Bardeen, Big Panda, Torq.

References and further reading

[1] Yann LeCun, Objective Driven AI, Ding-Shum Lecture, Harvard University, 3-28-2024

[2] Andrew Ng, AI Agentic Workflows, The Batch, Issues 241-245

[3] Evan Armstrong, What are AI Agents – And Who Profits From Them?

[4] Bardeen.ai, https://www.bardeen.ai/posts/are-ai-web-agents-a-gimmick

[5] Evaluating AI systems – Anthropic

[6] Unraveling Gemini: Multimodal LLMs on Vertex AI

[7] Devin: The AI software engineer with a game changing UI interface

[8] 101 real world Gen AI Use Cases : Google Cloud

[9] How people are really using Gen AI – HBR

[10] Insight Partners IGNITE Enterprise customer briefings

[11] The Landscape of Emerging AI Agent Architectures for Reasoning, Planning, and Tool Calling: A Survey – Tula Masterman, Sandi Besen, Mason Sawtell, Alex Chao

[12] PlanBench: An Extensible Benchmark for Evaluating Large Language Models on Planning and Reasoning about Change – Karthik Valmeekam, Matthew Marquez, Alberto Olmo, Sarath Sreedharan, Subbarao Kambhampati

[13] Market Map: Awesome AI agents: A list of autonomous agents, Agent Database, Staf.ai, Generative AI’s Act Two, Gen AI Infra Stack

[14] AIOS: LLM Agent Operating System – Kai Mei, Zelong Li, Shuyuan Xu, Ruosong Ye, Yingqiang Ge, Yongfeng Zhang

[15] Generative Agents: Interactive Simulacra of Human Behavior – Park et al.